Computational Photography: The photographic future

Image processing technologies complement the physical limits of cameras. Thanks to them, photographers can expand their range of possibilities when making a capture. However, traditional processes change as technology advances, and maybe it is time to understand that photography is evolving, and so are we.

An image created by ones and zeros

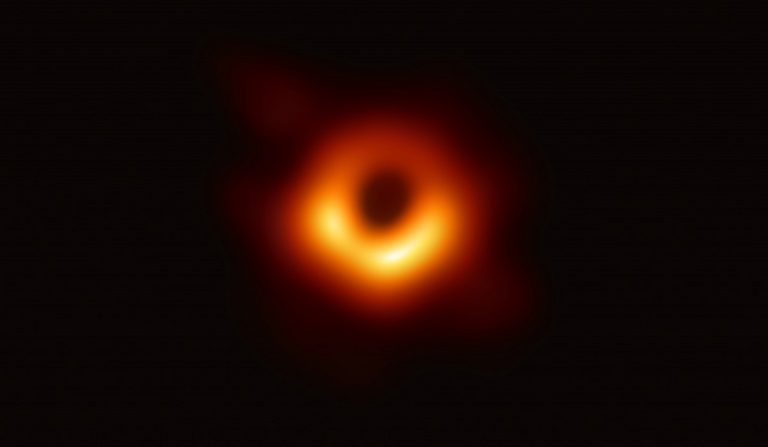

Perhaps the most important image captured in recent years is the one made by the Event Horizon Telescope (EHT). This network of radio telescopes had to be synchronized very precisely to collect the radiation emitted around the black hole and thus obtain the dark mass that represents its shadow. It was the most anticipated photograph of the universe.

And the key word in these processes is 'represent'. What we capture in that shot is not an optical image. It is radiation that stimulates a series of electronic devices and passes through an image processing system whose data filled half a ton of hard drives, producing an image of 7416 x 4320 pixels at 16 bits of depth. Capturing it as an optical image would have required building a telescope the size of the Earth.
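To get a feel for why the telescope would need to be the size of the Earth, here is a rough sketch of the usual rule of thumb that angular resolution is about wavelength divided by aperture. The observing wavelength of roughly 1.3 mm and the function name are assumptions brought in for illustration; they are not taken from this post.

```python
import math

def angular_resolution_microarcsec(wavelength_m, aperture_m):
    """Approximate resolution (theta ~ wavelength / aperture) in microarcseconds."""
    theta_rad = wavelength_m / aperture_m
    # radians -> degrees -> arcseconds -> microarcseconds
    return math.degrees(theta_rad) * 3600 * 1e6

EARTH_DIAMETER_M = 1.2742e7  # mean diameter of the Earth
EHT_WAVELENGTH_M = 1.3e-3    # assumed observing wavelength (about 1.3 mm)

# An aperture as wide as the planet versus a single 10 m dish
earth_sized = angular_resolution_microarcsec(EHT_WAVELENGTH_M, EARTH_DIAMETER_M)
ten_metre_dish = angular_resolution_microarcsec(EHT_WAVELENGTH_M, 10.0)

print(f"Earth-sized aperture: {earth_sized:.1f} microarcseconds")
print(f"10 m dish: {ten_metre_dish:.0f} microarcseconds")
```

Under these assumptions the Earth-sized aperture resolves details more than a million times finer than a single 10 m dish, which is why the network has to behave like one planet-wide instrument.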

Likewise, while our photographic systems are still mostly optical, the role of computing is growing. The color of a sensor is not a mixture and special filtering of silver halides developed in a chemical bath; it is the result of a study by each manufacturer, so that the camera's computer determines that stimulating a green pixel and a red pixel at the same time produces yellow. Our raw developers ship image processing versions that reduce more noise, recover more information and adjust colors better. We work in a workflow fueled by the machines' interpretation.
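As a toy illustration of that red-plus-green-equals-yellow decision, the sketch below collapses one 2x2 block of raw sensor values into a single RGB color. It assumes an RGGB layout and simply averages the two green samples; real cameras interpolate from neighboring photosites and apply each manufacturer's own color science, so treat the function name and the logic as a simplification.

```python
def bayer_block_to_rgb(block):
    """Collapse one 2x2 RGGB block of raw values into an (R, G, B) triple.

    Simplified on purpose: no interpolation, white balance or color matrix.
    """
    (red, green_1), (green_2, blue) = block
    return (red, (green_1 + green_2) // 2, blue)

# Red and green photosites fully stimulated, blue photosite dark
yellow = bayer_block_to_rgb(((255, 255), (255, 0)))
print(yellow)  # (255, 255, 0), which a display shows as yellow
```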

In his study of computational photography, Vasily Zubarev mentions that Marc Levoy, a pioneer of computational imaging, defines these processes as “techniques that improve or extend the capabilities of digital photography in which the result is an ordinary photograph; however, one that could not have been captured with a traditional camera.” This is where the physical limits of cameras end and algorithms and computing come in.

In mobile photography this is much more visible: filters replace or speed up our need to edit images, compensating for things such as sharpness, shadow control, highlight control, color enhancement and even skin smoothing in selfies. Apple currently has a phone capable of relighting the scene to make it feel like a studio image: it takes the information represented in a shot and modifies it to give new meaning to its context and properties, and we still give the result the value of a photograph.

When we open the camera, the phone is already taking a thousand images that enter a cycle of image information recycling. By pressing the shutter, what we are doing is telling it to give us the last image of that cycle. But the camera is constantly working on the information from the stack of captures it is processing, where the layers are divided into white balance, focus, noise reduction, tone map, light map, highlights, shadows, face detection, geolocation metadata detection, structure, exposure and segmentation. At least 12 photographs are processed in milliseconds to produce a single image, which then joins a row of images in a buffer cycle so that one of them can be selected to upload to social media.
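The buffer cycle described above can be sketched as a small ring buffer that always holds the most recent frames and merges them when the shutter is pressed. This is only an illustration: `BurstBuffer` is a hypothetical name, frames are flat lists of pixel values, and plain averaging stands in for the far more elaborate merging a real phone performs.

```python
from collections import deque

class BurstBuffer:
    """Hypothetical ring buffer holding the most recent frames from the sensor."""

    def __init__(self, capacity=12):
        # deque with maxlen drops the oldest frame automatically when full
        self.frames = deque(maxlen=capacity)

    def push(self, frame):
        self.frames.append(frame)

    def capture(self):
        """Merge the buffered frames by averaging each pixel position."""
        if not self.frames:
            raise ValueError("no frames buffered yet")
        return [sum(values) / len(values) for values in zip(*self.frames)]

# The camera keeps pushing frames; pressing the shutter asks for the merged result
buffer = BurstBuffer(capacity=3)
for frame in ([10, 20], [12, 18], [14, 22], [16, 20]):
    buffer.push(frame)

merged = buffer.capture()  # averages only the last three frames
print(merged)
```

Averaging several exposures of the same scene is one simple way to reduce random noise, which is part of why a stack of captures can beat any single frame.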

What we cannot achieve in a single image with traditional photography is a common process in modern digital photography.

Networks and more networks

The near future is artificial intelligence, as we have discussed on previous occasions. Neural networks are changing our perception of the entire photographic process, from capture to development. An example is the use of AI in modern raw developers, such as Adobe's Sensei applied to Camera Raw and Skylum's Luminar platform.

At the level of craft, we are choosing scenes. However, light, color and structure itself are an interpretation of values that we hand to a computer to process. Each time we add one more process to the workflow, more machines intervene in that representation, which we control only to some extent.

The reality we capture is not so real, and it will always be influenced by how brands have programmed the computers in their devices to interpret it. But what we do at an artistic level is choose the distribution of light and color in the scene, create narrative and establish a stylistic pattern, so computing is secondary.

There is a danger for those unable to leave tradition behind and accept that the future lies in those little squares that transform reality into ones and zeros. Right now we must understand technology and adopt it within our workflows. It evolves very fast, and letting it pass us by could be the end of our careers.
