An ML Pipeline for Hand Tracking and Gesture Recognition

Many implementations of posture tracking algorithms exist today, and many of them are open source, but almost all require substantial computing power and are not practical on mobile devices.

That may change thanks to an algorithm developed by Google, which makes it possible to track hand movements on a mobile phone.

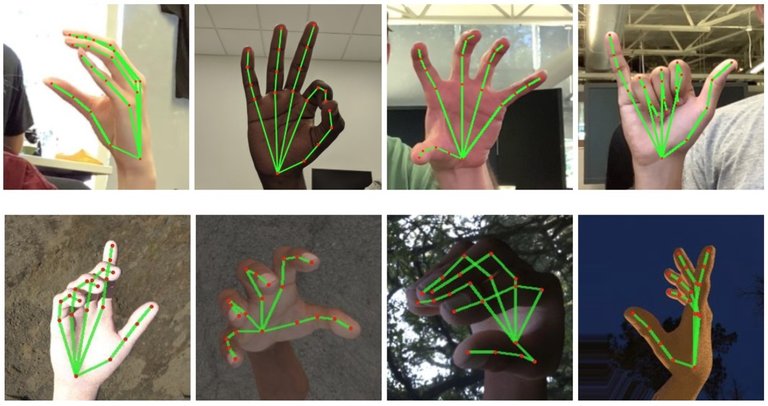

To achieve this, the algorithm builds a hand model consisting of 21 points, which are enough to describe the position of the hand relatively accurately at each moment.
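As a rough illustration of what a 21-point hand model can look like in code, here is a minimal sketch. It assumes the point ordering commonly used by MediaPipe Hands (index 0 for the wrist, then four points per finger from base to tip); the names `FINGERS`, `WRIST` and `finger_chain` are made up for this example and are not part of Google's code.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) of one hand point

NUM_LANDMARKS = 21
WRIST = 0

# Assumed ordering: four points per finger, from the base joint to the tip.
FINGERS: Dict[str, List[int]] = {
    "thumb": [1, 2, 3, 4],
    "index": [5, 6, 7, 8],
    "middle": [9, 10, 11, 12],
    "ring": [13, 14, 15, 16],
    "pinky": [17, 18, 19, 20],
}


def finger_chain(landmarks: List[Point], finger: str) -> List[Point]:
    """Return the wrist followed by the four points of the given finger."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} points, got {len(landmarks)}")
    return [landmarks[WRIST]] + [landmarks[i] for i in FINGERS[finger]]
```

One wrist point plus five fingers of four points each gives the 21 points mentioned above.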

Once it has a model of a hand, the algorithm analyzes the angles between the finger segments and compares them against the set of gestures it knows.
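The idea of measuring joint angles and matching them against known gestures can be sketched as follows. This is only an illustration, not Google's implementation: the point ordering (wrist at index 0, then four points per finger), the 60-degree bend threshold, the `GESTURES` table and all function names are assumptions chosen for the example.

```python
import math

# Assumed point ordering: 0 is the wrist, then four points per finger.
FINGERS = {
    "thumb": [1, 2, 3, 4],
    "index": [5, 6, 7, 8],
    "middle": [9, 10, 11, 12],
    "ring": [13, 14, 15, 16],
    "pinky": [17, 18, 19, 20],
}

# Hypothetical gesture table: finger states in the order
# (thumb, index, middle, ring, pinky); True means straight, False means bent.
GESTURES = {
    "fist": (False, False, False, False, False),
    "open_palm": (True, True, True, True, True),
    "pointing": (False, True, False, False, False),
    "victory": (False, True, True, False, False),
}


def angle_deg(a, b, c):
    """Angle at point b, in degrees, between the segments b->a and b->c."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0.0 or n2 == 0.0:
        return 180.0  # degenerate segment: treat the joint as straight
    cos = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def finger_states(landmarks, bend_threshold=60.0):
    """Label each finger straight (True) or bent (False) by its total bend."""
    states = {}
    for name, idx in FINGERS.items():
        chain = [landmarks[0]] + [landmarks[i] for i in idx]
        # Bend at a joint is 180 minus its interior angle; sum the three joints.
        bend = sum(
            180.0 - angle_deg(chain[k - 1], chain[k], chain[k + 1])
            for k in range(1, 4)
        )
        states[name] = bend < bend_threshold
    return states


def recognize(landmarks):
    """Return the name of the matching gesture, or None if nothing matches."""
    states = finger_states(landmarks)
    key = tuple(states[name] for name in FINGERS)
    for gesture, pattern in GESTURES.items():
        if pattern == key:
            return gesture
    return None
```

Reducing each finger to a straight or bent state keeps the comparison simple: a gesture is just a pattern of five states, so adding a new one means adding a row to the table.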

This could be an ideal basis for sign language applications. If you are an experienced programmer and want to dig deeper, the code is available on GitHub.

Source: ai.googleblog.com

https://ai.googleblog.com/2019/08/on-device-real-time-hand-tracking-with.html
