That Poor Pooch

The term "screwed the pooch" was used by Colleague to describe a situation where a mistake was made and cannot be changed. Taraz humorously suggested substituting "pooch" with "dog" to see if it would have the same impact. This led to a light-hearted discussion among the participants about the phrase and its variations.

Yeah. That's how the conversation went...

No, it was not.

That was part of an AI-generated (Copilot) summary of a Teams meeting with colleagues this evening. The session was on giving feedback, so, following the feedback model we were discussing, I asked my colleague whether it was okay for me to give him some feedback, described the behavior and its impact, and then asked a question.

Do you think saying "fucked the dog" would give a different impression?

After some laughs from everyone, he agreed to use it in future sessions. This covered the last part of the framework, which was "next steps" after the feedback. What is also interesting is that this happened near the start of the session, and although the phrase (used for comedic effect) came up several times throughout the rest of the discussion, there was no mention of it anywhere else in the summary.

Censored.

While that might be for the best, the opening paragraph contains some interesting details worth highlighting, because a lot of people seem to think they aren't going to be affected by AI, since it won't be good enough to replicate human behavior. Bearing in mind that this is the current, commercially available Copilot integration for Teams at work, not the far more robust and comprehensive versions used in other areas and in constant development, it did a very good job.

Taraz humorously suggested

Yes, it was suggested in humor, but it wasn't delivered in a humorous way. I had structured the feedback in a pretty authentic way and delivered each piece deadpan and dry, to the point where my colleague looked unsure where I was going with it, and he knows my humor. The last question gave the game away, of course, but since I didn't laugh as the others did, the AI would have had to do a bit more work to recognize it as humor.

This led to a light-hearted discussion

This is another bit of nuanced judgment, because it decided, based on what it heard, that the conversation was light-hearted, which it was. But I wonder whether it would have picked up on it if the conversation had been riddled with sarcasm and undertones of bitterness. Perhaps. I'd have to test it.

However, with everything "listening" to what we say these days, we should assume that almost everything we say is being recorded. For instance, while I have turned off all of my listening services (to the best of my ability), very few other people I know have turned off theirs. This means that even if my device isn't listening to me, other devices are, and placing me in that environment isn't difficult.

But there is more to it than this, because listening, translation and evaluation services are improving rapidly. For instance, the salespeople in our organization use a meeting-recording tool that analyzes and summarizes the content, separates the participants, can be queried, suggests follow-up actions and emails, and does a host of other things. This makes it "easier" for the salesperson to concentrate on the discussion. Supposedly. The tool works in multiple languages, but before the summer vacations the Finns were complaining that its translations and transcriptions were too inaccurate to use. After summer, the same Finns were surprised at how accurate it had become.

A few weeks ago, I wrote about a 14-year-old who killed himself after spending a few months talking to an AI chatbot on Character.ai that he had fallen in love with. Again, the conversation was pretty basic, but for someone as fragile and inexperienced as a kid can be, it was enough to push him to a place where he disconnected from others and spent increasing amounts of time being understood by an artificial tool.

I would predict that while many adults might scoff, if they spent any length of time talking with some of the more capable AIs already developed today, they would start to get lost too. In fact, many are already fooled by chat service bots, and have been for years. Everyone likes to think they aren't influenced because they notice certain cues, but the reality is that those are often "give me" errors, planted to make people believe they are smarter than the system while they miss the other 90% of the influencing triggers.

Social media and advertising have no effect on me!

Of course not, dear.

But as these tools become more ubiquitous and more capable, they are going to be released into the world in so many different forms that they will be unavoidable. And because they are advancing, and can consume and analyze information so rapidly, they are going to be able to "hear" things that we can't pick up on: minute changes in the tone, speed, rhythm and pitch of our voices, for instance. Do you want your partner using that during an argument to feed them counterarguments, catch your lies, or even monitor the micro-expressions on your face?

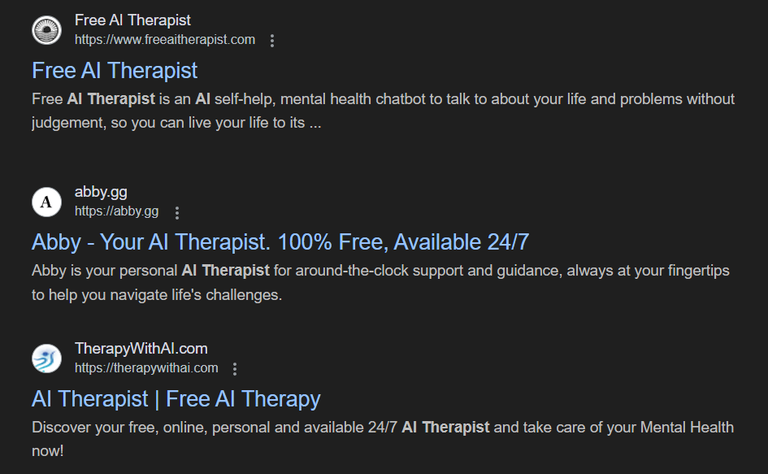

What about artificial intelligence used as therapists, psychologists and doctors? They will listen, they will monitor a thousand more data points, and they will be able to sort through millions of parameters in an instant, giving a solution with far less variation than their highly fallible human counterparts. The second-opinion diagnosis becomes the first and only point of reliance.

Free is cheaper than a human.

And it might be better, because it operates systematically. This doesn't mean it won't learn biases, of course, which also means there will be systemic errors, but to err is human. How many errors, how much bias, and how many people need to suffer before the cost outweighs the gain? In a world built to monetize through optimization, assume that the amount of suffering required to change something has to be pretty extreme. One person doesn't matter. Nor do a hundred, if the value generated from 100,000 exceeds the cost of mitigating the errors.

Many seem to think that AI needs to be better than the best of us to be viable, but that is nowhere near the case. It only has to be better than average, and even if it isn't, it just has to deliver savings. And in many areas, current AI is not just better than average, it is better than a large majority, which means it can already replace parts of what we do, and it is doing so.

And it is in the very areas where we thought a human touch was needed that it is encroaching quickly, because, like the "not influenced by social media" people, we have been fooled into thinking that AI can't trick us into believing it is real. Our overconfidence in our abilities is telling.

An AI would pick us out of a lineup.

No matter how smart and knowledgeable we think we are, we actually have incredibly limited understanding and perspective of our world, due to the constraints of being human. We can't record, hold or process most of the information that passes through our experience, so we are continually filtering out what doesn't fit. As a result, we see more of what we want to see, less of what we choose not to see. We are blinded, yet our feelings tell us we have clarity on the situation.

Artificial intelligence isn't going anywhere, but pretending it will bring only good is like ignoring the potential downsides of nuclear power development. Yes, if it were only used to empower humans to have more opportunities and be the best versions of ourselves, that would be great. But that is not how tools are used in reality.

Innovations aren't used to make all people better, they are used to make some people richer.

This is business.

And as I have said before, if we don't change how we value humanity, to the point where wealth is generated by improving human experience and wellbeing at scale, we are going to end up in a sci-fi dystopia.

Our pathway is heading closer to dystopic, than utopic, because we are myopic.

Shortsightedness.

At some point, I hope we will be able to look back and say "we dodged a bullet on that", as we pivoted away from a dystopic world and into one where humanity thrives. However, I get the feeling that in hindsight we will be discussing how,

We fucked the dog.

In a light-hearted way of course.

According to the AI.

Taraz

[ Gen1: Hive ]

Posted Using InLeo Alpha

We barely know all the effects social media has on us; we truly have no idea what impact AI will have on us emotionally, economically, etc.

It's wild to me that for thousands of years technology barely changed from generation to generation... so many sons lived identical lives to their fathers... and now...

It really is amazing how much life has changed in the last 50 years. And, it isn't just in things like AI.

How do our bodies/minds react to all of this?

Yeah... considering that most medicines have to go through a decade of testing before being available for doctors to prescribe, and yet we're producing all these new compounds without rigorous study, same with AI, etc. I don't want to slow down progress, but it definitely feels like a technological wild west.

I bet the "listening" is real, because we sometimes see ads on social media about what we have just talked about.

Yep. But luckily, it is only a coincidence...

Time to start communicating in sign language as I sure don't want anyone listening to what I say, but they are probably videoing too lol

Very likely.

I don't necessarily believe that the future will be dystopian because at any moment we will screw up and go into oblivion. I agree with you on the possibility of such a dystopian world if we don't change certain aspects of our society. If we continue with environmental degradation, social inequality and unethical abuse of technology, we could be heading towards a dystopian future. However, there is also an opportunity to build a utopian future, based on global cooperation, sustainable development and ethical innovation. It will depend on our present actions, our collective decisions and our ability to learn from past mistakes and prioritise the common good over individual or corporate interests.

There was once a time when many of us thought that artificial intelligence would never be able to detect and understand human emotions, humor, irony and sarcasm while we interacted with it, or respond to us with the same wit we were using to show it that we would always be superior. But it seems scientists and engineers have been training models on MUStARD for some time now. And I guess we can't be so sure anymore that AI won't kick our ass on this sooner rather than later. Am I wrong?

We have a system at the school that monitors the students' accounts. While they sign a handbook acknowledging it, some of the kids either don't know or don't care. The things they talk about are just bizarre, stuff I never would have talked about at that age. It really gives you some insight into the multitude of complex issues they are dealing with every day. It's easy to see how they could be substituting AI for real companionship.

Our pathway is heading closer to dystopic, than utopic, because we are myopic.

Brilliant piece. I thought you covered all the major angles whilst adopting a humorous tone throughout.

What a sad state of affairs this world is in. There is already enough technology in the world for us to implement UBI successfully, so that people aren't left starving in the streets, but that would also mean limiting the wealth gap and taxing billionaires at a much higher rate. We all know that's not happening as long as billionaires control the government.