Ways of income for Engagement Project + Major code update (with explanation) to check the comment quality

Good evening everyone, I hope you are doing well.

This post is to notify you of the major changes I made to the code so that copy-pasted and very similar comments are identified.

Before going into the code, I would like to mention the ways I am trying to raise income for the Engagement Project.

All the tokens earned go to the Engagement Project itself.

SPORTS

Currently @amr008.sports earns through -

- Curation

- Authoring the "SPORTS TODAY" discussion thread daily

From today -

- Daily Actifit post.

CTP

Currently @amr008.ctp earns through -

- Curation

- Authoring the "Curation Report of Engagement Project" daily.

STEM and LEO

- Curation alone.

In the near future -

- All the Hive earned from @amr008.sports and @amr008.ctp will be used to buy LEO and STEM to give a boost to the Engagement Project.

If you have any suggestions for what more can be added, please let me know in the comments.

Python code update - using FuzzyWuzzy - to keep spammers out of the top 25.

The main intention of the Engagement Project is to reward those who engage with others and put effort into that engagement. It would be unfair if I let people who copy-paste a number of comments surpass the efforts of genuine users.

Fuzzy Wuzzy

Fuzzy Wuzzy is a library which lets us find the similarity between two strings (in layman's terms, two sentences). I have used it in my code to find similar comments -

How does it work? Example

from fuzzywuzzy import fuzz

from fuzzywuzzy import process

s1="Thanks"

s2="Thanks buddy"

s3="Thanks a lot buddy"

compare=process.extract(s1,[s2,s3],scorer=fuzz.token_set_ratio)

print(compare)

Here I am comparing the first string s1 with s2 and s3. The output is -

O/P = [('Thanks buddy', 100), ('Thanks a lot buddy', 100)]

This means every word of s1 is also in s2 and in s3, so token_set_ratio reports a 100% match between s1 and each of them.

Example 2-

s1="Thanks a lot "

s2="Thanks buddy"

s3="Thanks a lot buddy"

compare=process.extract(s1,[s2,s3],scorer=fuzz.token_set_ratio)

O/P = [('Thanks a lot buddy', 100), ('Thanks buddy', 67)]

This means s1, which is "Thanks a lot", is 100% similar to "Thanks a lot buddy" and 67% similar to "Thanks buddy".
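To see why a short comment can score 100 against a longer one, here is a rough sketch of the token-set idea using only Python's standard `difflib`. It is a stand-in written for illustration, not FuzzyWuzzy's own code; the names `token_set_score` and `_ratio` are made up for this example, and real FuzzyWuzzy scores can differ slightly on other inputs.

```python
import re
from difflib import SequenceMatcher


def _ratio(a: str, b: str) -> int:
    """Similarity of two strings as a rounded 0-100 score."""
    return round(100 * SequenceMatcher(None, a, b).ratio())


def token_set_score(s1: str, s2: str) -> int:
    """Rough stand-in for fuzz.token_set_ratio, not the library's own code."""
    # Lowercase, keep only alphanumeric words, and drop repeated words.
    t1 = set(re.findall(r"[a-z0-9]+", s1.lower()))
    t2 = set(re.findall(r"[a-z0-9]+", s2.lower()))
    if not t1 or not t2:
        return 0
    common = " ".join(sorted(t1 & t2))
    # Rebuild each side as "shared words + words unique to that side".
    full1 = " ".join([common, *sorted(t1 - t2)]).strip()
    full2 = " ".join([common, *sorted(t2 - t1)]).strip()
    # The best of the three pairings is the score, so a comment whose
    # words all appear in the other comment scores 100.
    return max(_ratio(common, full1), _ratio(common, full2), _ratio(full1, full2))


print(token_set_score("Thanks", "Thanks buddy"))               # 100
print(token_set_score("Thanks a lot ", "Thanks a lot buddy"))  # 100
print(token_set_score("Thanks a lot ", "Thanks buddy"))        # 67
```

Word order and repeated words do not matter in this kind of scoring, which is why it suits comments that reuse the same phrases.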

Let's take some real examples now -

@thatgermandude came forward and told me that he runs a lottery and replies to various authors with similar comments, so he was getting an unfair advantage over others even though the comments are similar.

His latest comments -

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Are you sure you have the right LEO Token? I am talking about the LeoFinance Token on hive.

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

Thank you for participating! Today you had no luck...

Maybe you will do better in my next Not-a-Lottery

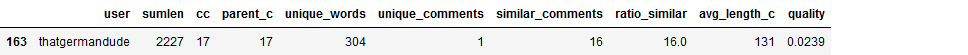

So when I applied the string comparison and ran a test -

Observe the quality points, which determine the rank; here it is 0.0239.

Without applying string comparison

He was actually in the top 25 today with a comment quality of 2.2218 (because the length of his comments, the number of comments and the number of people he talked to are all high).

I would like to apologize to @thatgermandude for using his example here; I am using it only because he was so honest and voluntarily came forward and told me about this.

Prevents copy-pasted and highly similar comments from getting upvotes.

If you look at the above example, @thatgermandude was at 18th rank, but after I implemented the comment comparison he is now at 163rd rank.

So what if someone decides to take advantage by using a bot in the future? It will be very difficult to rank higher without manually answering the comments.

Which comments do you consider similar?

- I will compare your 1st comment with all the other comments. If any of them returns a 60% or higher match, the comment is considered similar and the similar-comment count goes up.

- Then I will move to the 2nd comment, compare it with the 3rd and all remaining comments, and repeat the process until all the comments are done.

I arrived at 60% by manually checking a lot of different strings and taking samples from real users' comments.
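The two steps above can be sketched roughly as follows. This is one reading of the procedure, not the project's actual code: each comment is counted once if at least one later comment matches it at 60% or more. The names `count_similar` and `simple_ratio` are hypothetical, and `simple_ratio` is a standard-library stand-in; with FuzzyWuzzy installed you could pass `fuzz.token_set_ratio` as the `scorer` instead.

```python
from difflib import SequenceMatcher

SIMILARITY_THRESHOLD = 60  # percent, the cut-off described above


def simple_ratio(a: str, b: str) -> int:
    """Stand-in scorer (0-100); the post itself uses fuzz.token_set_ratio."""
    return round(100 * SequenceMatcher(None, a.lower(), b.lower()).ratio())


def count_similar(comments, scorer=simple_ratio, threshold=SIMILARITY_THRESHOLD):
    """Count comments that match at least one later comment."""
    similar = 0
    for i, current in enumerate(comments):
        # Compare only against comments further down the list.
        if any(scorer(current, other) >= threshold for other in comments[i + 1:]):
            similar += 1
    return similar


comments = [
    "Thank you for participating! Today you had no luck...",
    "Thank you for participating! Today you had no luck...",
    "Are you sure you have the right LEO Token?",
    "Thank you for participating! Today you had no luck...",
]
print(count_similar(comments))  # 2
```

Because each comment is only compared with the ones after it, the last copy of a repeated comment has nothing left to match, so three identical comments add 2 to the count under this reading.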

Spam Alerts

I have also set an alert in the code now, using this logic -

- If 50% or more of a user's comments are very similar to each other, the code shows me the user's name.
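A minimal sketch of that alert rule, assuming the similar-comment count and the total comment count per user are already known. The function name `spam_alerts`, the shape of `stats` and the numbers in the example are all made up for illustration.

```python
ALERT_SHARE = 0.5  # flag users with 50% or more similar comments


def spam_alerts(stats, alert_share=ALERT_SHARE):
    """Return user names whose share of similar comments reaches the limit.

    `stats` maps a user name to (similar_comments, total_comments).
    """
    flagged = []
    for user, (similar, total) in stats.items():
        # Skip users without comments to avoid dividing by zero.
        if total > 0 and similar / total >= alert_share:
            flagged.append(user)
    return flagged


# Made-up numbers, for illustration only.
example = {"user_a": (10, 10), "user_b": (3, 12), "user_c": (5, 10)}
print(spam_alerts(example))  # ['user_a', 'user_c']
```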

This is how I found out -

The code told me that @erarium has 100% similar comments, and it was actually true -

You can check the latest comments here and see -

https://leofinance.io/@erarium/comments

Is this intentional? Absolutely not; it is a curation project just like mine. It is not their job to tell me they will post the same comments; it is my job to figure it out.

Ranking of @erarium before this code implementation - 11

Ranking of @erarium after this code implementation - 160

I wanted to do this for a very long time and I am very happy I got this working to some extent. This code will be used from tomorrow to rank and curate. This doesn't mean the other factors don't matter anymore; of course they do. Everything has its own weightage. It just became harder for non-quality comments to be at the top.

@abh12345 and @crokkon, what do you feel about this?

Regards,

MR.

Posted with STEMGeeks

Goodbye Top25 :,-)

Oh that's a little sad. You will have to make manual comments instead to each participant in the not-a-lottery xP

Lol, I am really sorry and I hope you didn't feel bad about me using your example.

nah I'm totally fine with it. I just hope I can get my score back when I am actually one of the most engaging users.

I'm sure you will!

Posted Using LeoFinance Beta

You totally can, because I compute the ratio of similar comments to unique comments.

Meaning: if you post 30 genuine comments and 10 similar comments, the former will outweigh the latter.

Just because you used similar comments doesn't mean you can't be in the top 25; it just means that you need to make unique comments too to get there.

I hope you got what I am trying to say.

I get what you are saying and I hoped your solution would work this way. Thanks!

You are most welcome. I will participate in the lottery too, soon.

We could try to branch out like LBI and SPI did with gaming accounts as well. But I'm not sure if there are any resources or time available for that.

Otherwise great updates! And remember, if you do some listing of accounts, consider doing whitelists instead of blacklists. Blacklists tend to never end and never be enough!

Ah, that will be too tough given the lack of time on my side. Maybe if someone adds beneficiaries I will be happy lol

Yeah, I figured that! You're gonna need an entire team like those guys have :)

But if you decide to form one later on I'll definitely volunteer!

For sure. I am just waiting to see how the first payout goes: how much APR can be afforded, how much can be powered up and so on. Once all of that is in place, maybe I will look beyond just this.

Looks like you have a lot of great things in the works! Nice job! I am really impressed by the time and effort you have been putting into this. This is going to be such a great way to encourage engagement and help grow smaller accounts!

Thank you @bozz :) It really feels good when the effort is appreciated . Will continue to make it better.

It could be if they know about it! We need to promote this even more so it's not just the big players trying to beat each other every day ;)

I've been seeing a ton of posts about it. Next time I see a less technical and more promotional post I will send it along.

Sounds good :)

Thank you @bozz for the support.

@amr008 and @anderssinho I am reblog-ing the post! Get some eyeballs on the content!

@amr008 Thank you. I am learning something new every day! I didn't realize the complexity of "bot-ting" and the power of copy and paste! Does this apply only to text? The reason behind my question is that I am considering using a graphic after my replies/posts as a way to watermark my comments/posts, etc. Here is an example. I am using this graphic because I have posted the graphic in comments prior to this post.

Thank you again for your insight into the "engagement" process!

Hey @kingneptune. All URLs (http:// and https://) and images are removed before calculating the quality of comments.

So you can continue doing that; I don't think it will pose any problem.

@amr008 Thank you! That was a quick response! I am at 0523 PST in the world, how about yourself?

It's 7:06 PM over here :)

@amr008 It sounds like all of this work has created a thirst within you! Have a !BEER on @kingneptune

Since I have been giving away beer the sentence above has been a variation of my posts as well. Will that have an effect on my quality? I am not performing a copy and paste, however, I do often use some form of "Thirsty" variation within the post.

Lol, love the beer.

Regarding the beer sentence: there should be a match of 60% or above for the comments to be found similar.

Say your first post says - ! beer

Second post - you deserve a ! beer .

Are they considered similar ? Absolutely yes .

Consider this -

First comment - You did put a lot of effort , take your ! beer

Second comment - Man , this is something new to me . I definitely enjoyed the post - a ! beer for you

These aren't similar.

Think you can save that example, because I guess there will be more people asking as the projects grow ^^

Sure, I can put that example in every time I post something related to the Engagement Project.

@amr008 Perfect! Thanks for the example and I have been changing it up and will continue to do so in the future. I was looking at your @amr008.sports blog as well. Are you part of the initiative with Sports.gov as well? I read a post for case for delegation. I have delegated to sports.gov and the other individual but am at a loss on finding who I have delegated to? Still new over here on the blockchain so some of it can be frustrating as well as engaging. LOL.

Oh, I think it's better that you delegate to @amr008.sports if you want to help the Engagement Project. The sports.gov is actually just the community fund account :)

@amr008 I have gone to both leodex and hive to look at delegation. That is not the issue. It only tells me how much I have delegated not to whom? I believe in your post you spoke about delegating to both @amr008.sports and @sports-gov as part of that project? Also the delegation was to be the sports token? Thanks! If so I am looking to delegate more sports talk tokens.

Here is how you delegated:

@anderssinho Wow! What in the world? How did you find that? Thank YOU! That is awesome! I would like to learn how to do that!

go in to sportstalksocial.com and wallet :)

@anderssinho Thanks!

Go here - https://sportstalksocial.com/@kingneptune/tranfers .

In the SPORTS power row, click the eye symbol.

@amr008 done..... Do you have a way of checking to see your delegation? I just delegated to @amr008.sports.

Got it , received 5000 SPORTS.

"The King" has struck again!

Oh, @sports-gov is the SPORTS community account. I have no link to it except that I am asking for a delegation from that account to my sports curation account, which is @amr008.sports.

If you want to check all your coins + delegations, the best place is https://leodex.io or https://hive-engine.com .

View or trade BEER.

Hey @amr008, here is a little bit of BEER from @kingneptune for you. Enjoy it!

Learn how to earn FREE BEER each day by staking your BEER.

I am impressed my dear MR geek, you are improving the quality of the Hive environment by your sterling efforts.

Thank you @julianhorack . I am just trying to do my part here :)

What is a MR geek? I’m not familiar with the meaning of MR.

When the author finished his post above, he signed out with the name MR at the end. It appears to be his initials to his name, so I used it to address him personally.

Ahhhh thank you. I did not make that connection.

Thank you very much :)

Yes, I did compare this with previous results and there was a massive difference. Since the code alerts me to possible spammers and their previous ranks, I was surprised to see that many copy-pasters had a rank of around 40-50 out of 200 people on LeoFinance; after the implementation all of their rankings have gone past 150. Even if they somehow get into the top 25 by posting, say, 10000 comments, the code rejects their posts and doesn't upvote them.

Fuzzy Wuzzy is awesome, let me know if you need any help :)

@amr008 Is there a penalty for upvoting without a comment? Things that make you go UMMMMMM........

I didn't get you. What do you mean by that?

@amr008 If a person only upvotes a post and doesn't add a reply is there a "spamming" penalty? I would imagine that a reply would be more beneficial (engagement wise) than a "Solo" upvote? Does an upvote (solo) count towards engagement? If it doesn't count then the "engagement" factor is a wash. If there is a penalty "Spamming" then it would be better to add a comment vs. a "Solo" upvote.

Why am I asking this? Because sometimes I am online looking at content and the "brain" is tired. When I am in this "Brain Waxing" mode, all I accomplish is an upvote.

Lol no no. None of that is taken into account.

Also, I just saw the tip you sent. Thanks a lot.

@amr008, hey you are welcome! You are doing some great things over here and I appreciate your effort!

Thanks buddy :) Do you watch sports ? lol I am trying to see who all are interested in SPORTS .

Love these gamification improvements to the blockchain, they make interacting more fun :)

Don't spam though lol .

Great remedy against system abuse. Any action has a reaction, and it is great that you found the solution for it. Organic engagement is the one that should matter in the end.

Thank you @behiver . I agree with the last point completely. We should reward organic Engagement.

Great addition to the process. I agree that bots that post the same message multiple times should not be rewarded over the people who are providing unique comments.

Totally. Although I check the frontend and make sure the comments are made from LeoFinance or the respective tribes, sometimes people copy-paste, and that increases the number of comments.

Most bots right now have "beem" as their frontend, so they are not posing any problem either, since I count only comments made from Leo, CTP, STEM and Sportstalk.

I’m genuinely impressed. You put all this in prod very fast and are rolling out updates/fixes at light speed too!

Not only did you identify the breaches, you closed them in a blink.

Thank you for all your effort for the blockchain, you’re also responsible for its success!

Good post (Am I winning? lol)

Thanks a lot @hykss. It really feels good when I get responses like this and this is the main reason and motivation for me to do more.

Fella, you're putting some serious time into this! Truly appreciated! How are your delegations to each tribe going?

Thank you @nathen007.stem.

Delegations are huge on SPORTS, and the proposal will be passed today, so another 10 million will be added to the sports account if everything goes smoothly.

Leo has less delegation for now, but that's understandable.

Thanks for all the hard work and nice job removing the duplicate comments. It's smart to use an API so you don't have to do the work for finding which ones are duplicates and not (easier on updates also).

As for the 60%, I would trust it for now but it's hard to decide an accurate sample size. Maybe over the course of the project and more data, this number can be changed to match what it really is.

I replied to this comment 30 minutes ago :/ , didn't you get it?

Anyway, I was saying yeah, 60% is just what I came up with by checking a few sentences manually. I will increase or decrease it based on how it goes in future.

I was checking replies. It's not showing up in replies so I forgot about it. Technical errors I guess, since replies aren't showing properly in LEO or CTP. I had to go to the thread to see the reply.

Let's see how well it goes with the current rate before deciding how to proceed in the future.

Neat! I did not come across FuzzyWuzzy before. The fuzzy matching you described is a great solution for the problem.

What do you do with the spam alerts? Will you implement a block list?

I personally am against a blacklist/block list. Say I posted 25 copy-paste messages today, but tomorrow I really want to engage genuinely with others. I understand my mistake, but because I am on the blacklist I won't be upvoted.

I prefer ignoring them and making sure they are ranked past 150.

But I like to look at the user names displayed by the code to keep a check on how well my code is working.

I hope you got my point.

Sounds good to me, thanks for clarifying!

Do you ever sleep? lol It took me half an hour just to read through and try to understand what you were telling me. I can't even imagine how long it took you to actually write it. Seriously, really great work here. I'm just trying to keep my head down and keep engaging. I'll leave all the math to you. :-)

Ah, sleep is for the weak lol, just kidding, I am heading to bed right now. It did take some time to write this, but if you see the responses in the comment section, it is really worth it.

My friend, I take advantage of this post to ask you what your commenting project consists of, since at Hive we have, together with @eddiespino, a project to rate comments while educating the community to encourage them to leave valuable comments on posts.

You can visit our blog and learn more about our project @elcomentador. I am interested to know how we could implement something similar at LeoFinance and if there is a possibility to work together.

Translated with www.DeepL.com/Translator (free version)

Hello @garybilbao. I got some idea by looking at your blog. Can you drop your Discord ID here so that I can reach you there?

Very well, I will write to you and we will have a better conversation, I think we can do something great.

Absolutely . Let me know your discord if you want to talk over there.

garybilbao (El Comentador)#0543

Nice. I need some fuzzy applied to the EL.

Let me know if you need any help ;)