Text-To-Image [VQGAN + CLIP] : Simple Experiments

Ever since OpenAI released the code for the CLIP model, I've been seeing an explosion in the generative arts. After reviewing the published research, I've been thinking about what can be generated using this model for about a while, and I've already started doing some experiments using various deep learning models.

CLIP is very efficient at telling if an image and a short piece of text belong together or not. But why do we want to know if they fit well together in the first place? The answer is that this task formulation is general enough to derivate many other tasks from it. For example, if you are familiar, this is like image recognition on ImageNet. The task there is to classify images. And this is what neural networks have been doing so far.

I don't want to take it too long. Shortly, CLIP allows to create all sorts of interesting visual art merely by inputting some text. If anyone is interested, they can go and check out the original article.

To get good results with Text-to-Image models, it's quite important to try a variety of text variations. By using CLIP with an image generator like VQGAN, the results can be incredible.

Let's generate some outputs

|  |

|  |

- Prompts: Abstract Impressionism

After a certain number of iterations, the outputs did not change much. It is clear that customizations need to be made on the prompt. However, it is necessary to consider that too many independent images on the data stack can produce complex results.

|  |

|  |

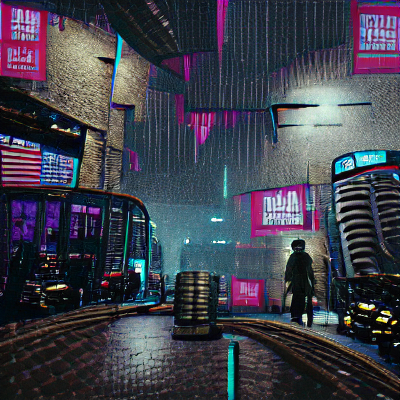

- Prompts: A rainy city street in the style of cyberpunk noir

Details about the environment in which the output was created can also give clearer results. This is actually an indication of how powerful the CLIP model is.

|  |

|  |

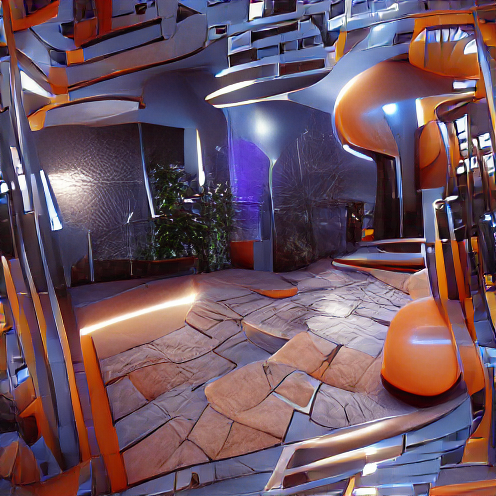

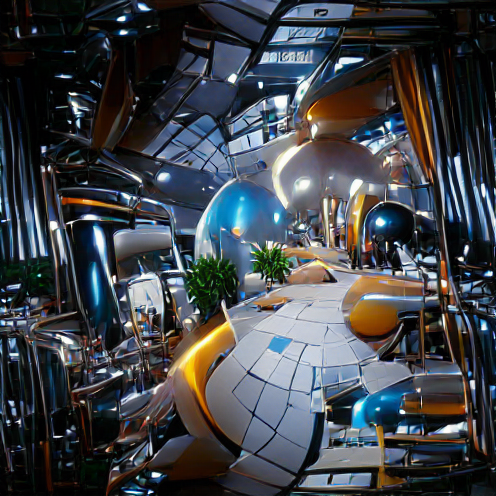

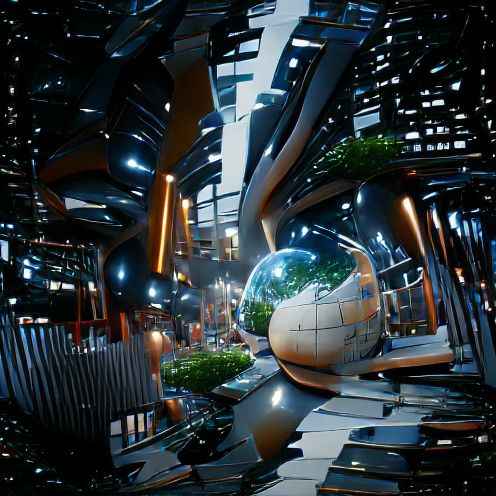

- Prompts: Futuristic World in Unreal Engine

As far as I understand, being vague is a great way to get variety. However, more effective results can be obtained by focusing on the concept that we are looking for and making prompt and parameter improvements around it.

There are a lot of shared Google Colab notebooks floating around for doing text to image with pre-trained GAN and diffusion models. You don't even need to know coding to do this simply.

I will to try to blog from time to time about the experimental work I've been working on for a few weeks.

Stay tuned