What will happen to Cyber Security with Artificial Intelligence in the future?

When we hear «Artificial Intelligence», we think of complex systems taking the form of robots or evil human-machines. While some of those ideas may come to fruition at some point, what will happen in the battle between good and bad, between reality and technology?

But first of all,

What is Artificial Intelligence?

Essentially, AI is any technology we develop and use that performs a task as intelligently as a human doing it would. Even cleaning dishes! However, the definition itself is understood in many different ways. AI systems typically demonstrate at least some of the following behaviors: planning, learning, reasoning, problem-solving, knowledge representation, perception, motion, manipulation, social intelligence, and creativity.

Usually, when imagining AI, we think of ominous robots out to destroy the world. We’re talking human-like cyborgs. You’ve probably seen the sci-fi movie The Terminator, which is a great example. However, AI doesn’t have to be «intelligent», «lifelike» or «murderous» to count as AI. Artificial Intelligence is a growing resource that is interactive, autonomous, and self-learning, which makes it suitable for many tasks that will help us in the future.

We shouldn’t think too highly of AI just yet, as it is still early in its development. There are a couple of emerging AI applications that aren’t too complex but still help us in daily life, such as flagging inappropriate content online, detecting wear and tear in elevators, or responding to simple customer-service queries. But even if AI is only capable of small things like these, you’d be amazed to see the systems that are built to do these tasks.

Could AI manage Cyber Security?

If we had some sort of AI Police Patrol that roamed the internet, hungrily crawling for illegal content or internet trolls, most of the cyber attacks we deal with today would likely be reduced to the bare minimum. Not to mention how many of the homemade hackers who sit in their chairs all day would probably go offline. Anyway, back to the point: a cyber-security-based AI would bring a golden era for security developers and help with a lot of pentesting and other professional security tasks that need to be done. Think of the money savings!

But let’s talk theory. At a very simple level, AI and machine learning enable a system to understand the normal behavior of, for instance, an individual, an event, or a website. The system takes some time to observe the environment and build its own «baseline» of what normal reality looks like. Think of yourself as a child, when you were still developing your own rules, norms, and behavior. We’re basically “raising an AI child”.
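To make the idea of a learned «baseline» a little more concrete, here is a minimal sketch of one of the simplest ways a system can learn what “normal” looks like: record observations (say, how many times a user logs in per hour), then flag new values that sit too many standard deviations away from the average. The class name `Baseline` and the default threshold of 3 are assumptions chosen for illustration; real products use far richer models.

```python
from statistics import mean, stdev


class Baseline:
    """Hypothetical sketch: learns 'normal' from past observations, then flags outliers."""

    def __init__(self, threshold: float = 3.0):
        self.history: list[float] = []  # values seen while "growing up"
        self.threshold = threshold       # standard deviations that count as abnormal (assumed)

    def observe(self, value: float) -> None:
        """Learning phase: remember what normal looks like."""
        self.history.append(value)

    def is_anomalous(self, value: float) -> bool:
        """Return True if the value is far outside the learned baseline."""
        if len(self.history) < 2:
            return False  # not enough experience yet to judge
        mu = mean(self.history)
        sigma = stdev(self.history)
        if sigma == 0:
            return value != mu  # everything so far was identical
        return abs(value - mu) / sigma > self.threshold


if __name__ == "__main__":
    logins_per_hour = Baseline()
    for count in [3, 4, 5, 4, 3, 5, 4]:
        logins_per_hour.observe(count)
    print(logins_per_hour.is_anomalous(40))  # far above normal
    print(logins_per_hour.is_anomalous(5))   # within normal range
```

The "child" here only knows one number, but the principle is the same one larger systems rely on: observe first, then judge new events against what was observed.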

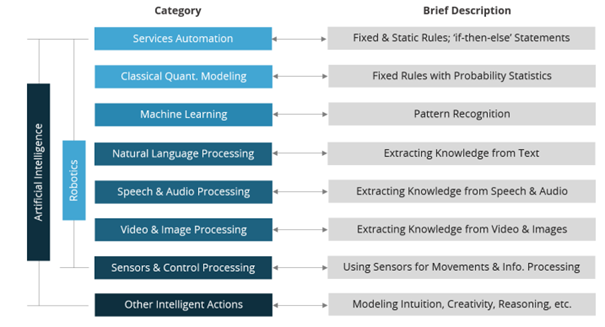

Below are the different categories of what an AI needs to learn, depending on what type of AI it is and what it is set to do, along with a description of each.

The robotics category is essentially the foundation of our “child AI”: this is where we give it the information it needs and program it with any input, such as recognizing a picture, figuring out who’s talking, or getting information from sensors. Then we have the last part, which completes our “child AI” so that it’s ready to take a GED and become an “adult AI”: other intelligent actions. This is where we give our AI the skill to use all of this input and information to reason, react, and perform other “output” actions like the ones we talked about in the introduction.

Just for simplicity, let’s now call our adult AI Adai. Adai has been taught how to use Natural Language Processing (NLP, extracting knowledge from text), and his purpose is to spot specific commands from the top 100 tools used for cyber attacks or black hat activity in general. In addition, he knows how to combine NLP with video and image processing to spot tutorials and guides tied to the same topic. Adai can now check for black hat activity, such as spotting specific commands being run while checking whether that same skid is watching some Hack The Box tutorial online. Our beloved Adai could then prevent an actual attack from happening and use a reasoning action to either act or send a warning.
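A very reduced version of Adai’s first job, spotting commands from known attack tools, can be sketched with plain keyword matching on command lines. This is a much simpler stand-in for real NLP: the watch-list below is a small hypothetical sample (the tools themselves are real, well-known security tools), and the function name `flag_command` is made up for this example.

```python
import shlex

# Hypothetical watch-list; a real system would use a curated, much larger list.
SUSPICIOUS_TOOLS = {"nmap", "hydra", "sqlmap", "mimikatz", "msfconsole"}


def flag_command(command_line: str) -> set[str]:
    """Return any watch-listed tool names that appear anywhere in a shell command line."""
    try:
        tokens = shlex.split(command_line)  # respects shell-style quoting
    except ValueError:
        tokens = command_line.split()       # fall back if quoting is malformed
    found = set()
    for token in tokens:
        name = token.rsplit("/", 1)[-1].lower()  # strip paths like /usr/bin/nmap
        if name in SUSPICIOUS_TOOLS:
            found.add(name)
    return found


if __name__ == "__main__":
    for cmd in ["sudo nmap -sS 10.0.0.1", "ls -la", "/usr/bin/hydra -l admin ssh://10.0.0.5"]:
        hits = flag_command(cmd)
        # The "reasoning action": here simply warn; a real system might block instead.
        print(f"WARNING {sorted(hits)}: {cmd}" if hits else f"ok: {cmd}")
```

Plain matching like this is easy to evade (renamed binaries, aliases), which is exactly why the article imagines Adai combining it with other signals before deciding to act.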

Or imagine John Smith, who lives in his girlfriend’s apartment in London. He logs into Facebook, Instagram, LinkedIn, and many other platforms. Adai could use statistics on where John usually logs in and temporarily change his password if a login happens from an odd place. Adai then uses machine learning to keep track of where John usually logs in and from which geographical area, helping prevent identity theft and other attacks.
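John’s case can be sketched as a per-user memory of login locations. This is a minimal sketch under assumptions: locations are plain city names (a real system would derive them from IP geolocation or device data), the class name `LoginMonitor` and the `min_seen` warm-up of 3 logins are hypothetical, and the "temporarily change the password" step is left to the caller.

```python
from collections import Counter, defaultdict


class LoginMonitor:
    """Hypothetical sketch: remembers where each user usually logs in from."""

    def __init__(self, min_seen: int = 3):
        self.locations = defaultdict(Counter)  # user -> Counter of login locations
        self.min_seen = min_seen               # logins to learn before judging (assumed)

    def check(self, user: str, location: str) -> bool:
        """Return True if this login looks unusual for the user.

        Normal logins are added to the profile; unusual ones are not, so an
        attacker's location does not become part of the baseline.
        """
        seen = self.locations[user]
        unusual = sum(seen.values()) >= self.min_seen and seen[location] == 0
        if not unusual:
            seen[location] += 1
        return unusual


if __name__ == "__main__":
    monitor = LoginMonitor()
    for _ in range(3):
        monitor.check("john.smith", "London")  # learning John's habits
    if monitor.check("john.smith", "Lagos"):
        print("Odd login location: trigger a temporary password reset")
```

Only learning from logins that look normal is a deliberate choice: otherwise a single successful attacker login would teach the system that the attacker’s location is fine.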

Or even deny someone access to their bank account because their face doesn’t match that of the account owner.

What to expect

Machine learning and AI can help guard against cyber attacks. A diverse set of algorithms, trained on different data sets and features, can help monitor and mitigate these threats. I personally believe that AI will go a long way with a lot of success, and people should not feel threatened by the idea of “AI taking over”. This is because AI is only trained for the specific areas where it is needed and can’t be used outside of them. With the right training and accurate information, I think most of our problems in the fight between red and blue will be solved. We can always improve and fix things, but sadly we can also expect other AIs to fight against the ones we have already made.

Overall, it’s a big question we can’t answer until we see where technology takes us.

There are types of artificial intelligence applications that can, and most likely will, be used in the cybersecurity solutions we have today. These are not necessarily based on human intelligence, but rather on specific mechanisms that imitate the same type of thinking, such as:

• Spam Filter Applications

• Network Intrusion Detection and Prevention

• Fraud Detection

• Credit Scoring and Next-Best Offers

• Botnet Detection

• Secure User Authentication

• Cybersecurity Ratings

• Hacking Incident Forecasting

• Malware Analysis
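The first item on the list, spam filtering, is a classic example of this kind of mechanism. A common textbook approach is a naive Bayes classifier, which learns how often words appear in spam versus legitimate mail and scores new messages accordingly. The sketch below is a minimal multinomial naive Bayes with add-one (Laplace) smoothing; the class name and the whitespace tokenizer are assumptions for illustration, and it must be trained on at least one spam and one ham message before predicting.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Minimal multinomial naive Bayes with Laplace (add-one) smoothing."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()  # messages seen per label

    @staticmethod
    def tokenize(text: str) -> list[str]:
        return text.lower().split()  # deliberately naive tokenizer (assumed)

    def train(self, text: str, label: str) -> None:
        self.doc_counts[label] += 1
        self.word_counts[label].update(self.tokenize(text))

    def predict(self, text: str) -> str:
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            # Work in log space so many small probabilities don't underflow to zero.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(counts.values())
            for word in self.tokenize(text):
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


if __name__ == "__main__":
    nb = NaiveBayesSpamFilter()
    nb.train("win free money now", "spam")
    nb.train("free prize click now", "spam")
    nb.train("meeting at noon tomorrow", "ham")
    nb.train("please review the report", "ham")
    print(nb.predict("free money"))                # spam
    print(nb.predict("review the meeting notes"))  # ham
```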

As smart as AI can be, there will never be a 100% guaranteed success rate. Researchers have found that it varies between 85% and 99% in most cases. But in the last few years, some products, such as Darktrace, have improved at detecting cyber attacks with the help of AI. AI can reduce the workload of cybersecurity analysts by helping them prioritize the risk areas that need attention and by automating the manual tasks they typically perform, such as searching through log files for signs of compromise. AI increases speed, resilience, opportunities, and chances of success. And because many AI algorithms are self-learning, they can get smarter with each attempt and failure, as their efforts are continuously better informed and more capable.
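The log-searching task mentioned above can be illustrated with a small, rule-based sketch: scan log lines for indicators of compromise, add up a weighted score per host, and hand the analyst a list sorted by risk. This is not how Darktrace or any specific product works; the indicator patterns, their weights, and the `host=<name>` log format are all hypothetical assumptions. An AI-based system would learn such weights rather than hard-code them.

```python
import re
from collections import Counter

# Hypothetical indicators of compromise and weights; a real system would tune or learn these.
INDICATORS = {
    r"Failed password": 1,
    r"Accepted password for root": 5,
    r"sqlmap": 8,
}

# Assumes every log line contains "host=<name>"; adapt to your own log format.
HOST_RE = re.compile(r"host=(\S+)")


def prioritize(log_lines):
    """Return (host, score) pairs, riskiest first, so analysts know where to look."""
    scores = Counter()
    for line in log_lines:
        match = HOST_RE.search(line)
        if not match:
            continue  # skip lines we can't attribute to a host
        for pattern, weight in INDICATORS.items():
            if re.search(pattern, line):
                scores[match.group(1)] += weight
    return scores.most_common()


if __name__ == "__main__":
    logs = [
        "host=web1 sshd: Failed password for admin",
        "host=web1 sshd: Failed password for admin",
        "host=web1 sshd: Failed password for admin",
        "host=db1 sshd: Accepted password for root",
        "host=web2 GET /?id=1 User-Agent: sqlmap",
        "host=web3 GET /index.html",
    ]
    for host, score in prioritize(logs):
        print(host, score)
```

Hosts with no matching indicators (like `web3`) never appear in the output, which keeps the analyst’s list short.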

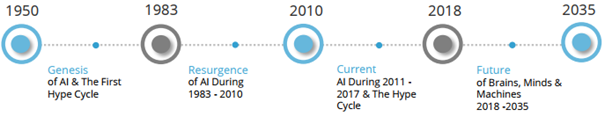

Picture 1 source: https://pngimage.net/artificial-intelligence-png-4/

Picture 2 source: https://www.kdnuggets.com/2018/02/birth-ai-first-hype-cycle.html