Artificial Intelligence and Music – What the Future Holds?

What is Artificial Intelligence?

Simply put, artificial intelligence (AI) is the ability of a computer program or machine to think and learn in a way similar to human beings. It can simulate human intelligence to a certain degree and use it to perform various tasks and solve problems.

The term “artificial intelligence” was first adopted in 1956 at the Dartmouth Conference in the USA, but AI technologies and applications have become far more popular and capable today thanks to larger volumes of data, more advanced algorithms, and improvements in computing power and storage.

Artificial intelligence research has already affected many major industries, and the music industry is certainly one of them.

Before attempting to predict the future of the complex field of AI music, let us first examine the past and try to understand the present.

A Brief History of Artificial Intelligence Music Creation

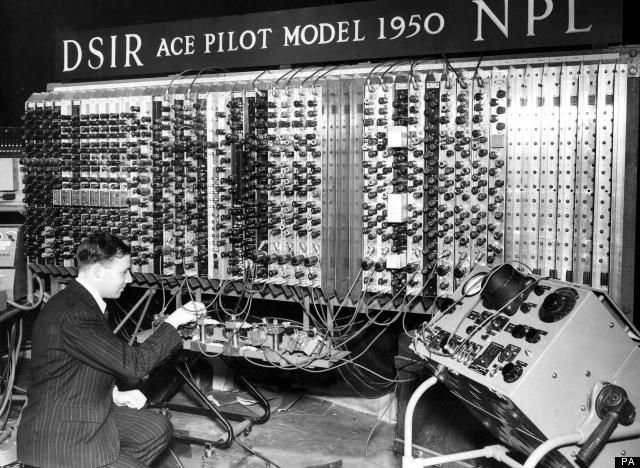

1# In 1951, the work of British mathematician Alan Turing led to the first known recording of computer-generated music.

Alan Turing was a computer scientist, philosopher and cryptologist who played a crucial role in breaking the Nazis’ Enigma code.

Alan Turing with first NPL computer

The recording was made in 1951 by a BBC outside-broadcast unit at the Computing Machine Laboratory in Manchester, England.

The machine used to play the melodies, a Ferranti Mark 1, filled much of the lab’s ground floor. Its repertoire included “God Save the King” and “Baa, Baa Black Sheep”.

Although Turing programmed the first musical notes into a computer, he had little interest in stringing them together into tunes; that work was carried out by a schoolteacher named Christopher Strachey.

Here are some fragments of the famous restored recordings.

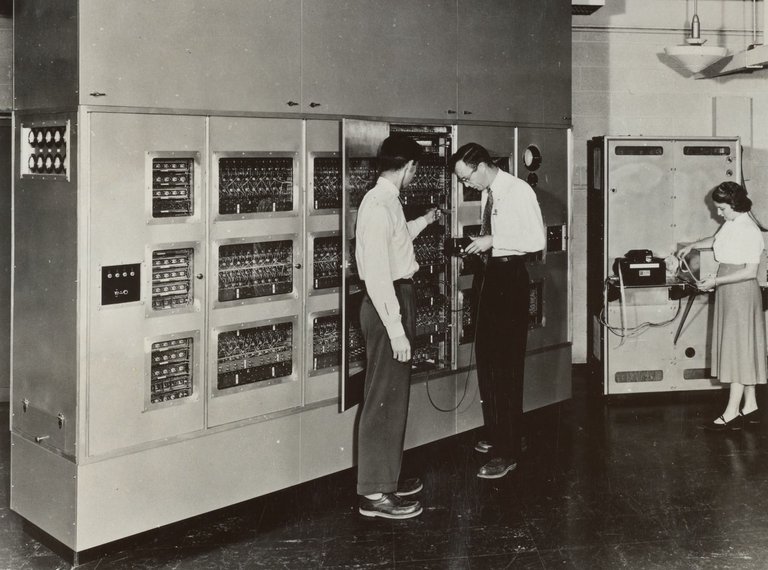

2# In 1957, composer Lejaren Hiller and mathematician Leonard Isaacson of the University of Illinois at Urbana–Champaign programmed the ILLIAC I (Illinois Automatic Computer), the first computer built and owned entirely by a United States educational institution, to generate compositional material. The result, the “Illiac Suite for String Quartet”, is widely regarded as the first score composed by a computer.

Photo of ILLIAC I, ca. 1950s

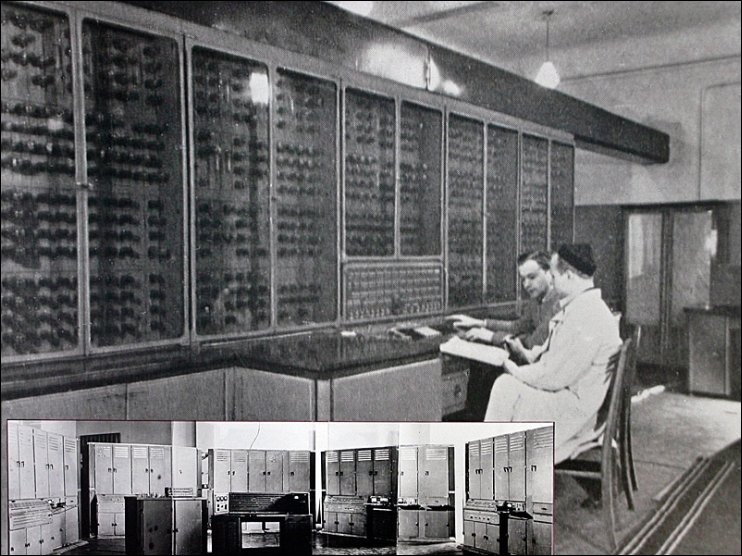

3# In 1960, Russian researcher R. Kh. Zaripov published the world’s first paper on algorithmic music composition using the URAL-1 computer: “An algorithmic description of a process of musical composition”.

URAL-1 Historic Russian Computer

4# In 1965, American inventor Ray Kurzweil premiered a piano piece created by a computer capable of recognizing patterns in various musical compositions. The computer analyzed these patterns and used them to create novel melodies. The piece debuted on Steve Allen’s I’ve Got a Secret program and stumped the hosts until film star Henry Morgan guessed Ray’s secret.

5# In 1973, Professor Barry Vercoe founded the MIT Experimental Music Studio (EMS), which became the first facility in the world to have digital computers dedicated full-time to researching and making computer music. EMS researched and developed many important computer music technologies: real-time digital synthesis, live keyboard input, graphical score editing, and programming languages for music composition and synthesis.

6# In 1974, the first International Computer Music Conference (ICMC) was held at Michigan State University in East Lansing, USA. The ICMC brings together computer music researchers and composers, and it later became an annual event organized by the International Computer Music Association (ICMA).

7# In 1975, researcher N. Rowe of the MIT Experimental Music Studio published the paper “Machine perception of musical rhythm” and developed a system for intelligent music perception: a musician could play fluently on an acoustic keyboard while the machine inferred and registered the meter, tempo, and note durations.
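The tempo part of this kind of inference can be illustrated with a deliberately simple sketch. It is not Rowe's system: it assumes note onset times (in seconds) are already available, treats the median gap between consecutive onsets as one beat, and converts that to beats per minute. The function name `estimate_tempo_bpm` is hypothetical.

```python
from statistics import median

def estimate_tempo_bpm(onsets: list[float]) -> float:
    """Estimate tempo from note onset times in seconds (assumes roughly one note per beat)."""
    if len(onsets) < 2:
        raise ValueError("need at least two onsets")
    # Inter-onset intervals: the time between consecutive note starts.
    intervals = [later - earlier for earlier, later in zip(onsets, onsets[1:])]
    # The median ignores a few rushed or dragged notes better than the mean would.
    beat_seconds = median(intervals)
    return 60.0 / beat_seconds

# A slightly uneven performance with a note roughly every half second.
print(round(estimate_tempo_bpm([0.0, 0.5, 1.02, 1.5, 2.0, 2.49]), 1))  # → 120.0
```

Recovering the meter (grouping beats into bars) and telling half notes from quarter notes would need further rules; this sketch covers only the tempo estimate.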

8# In 1980, American composer and scientist David Cope of the University of California, Santa Cruz developed a system called Experiments in Musical Intelligence (EMI). EMI used generative models to analyze existing music and create new pieces based on it.

EMI was a major breakthrough. By analyzing existing works, the system could generate unique, structured compositions within the framework of a specific musical genre. EMI has created more than a thousand works based on the music of more than 30 composers representing different styles.

9# In 1988, Sony Computer Science Laboratories (Sony CSL) was founded for the sole purpose of conducting computer science research; it later came to be considered one of the milestones in the field of AI music research.

10# In 1995, musician David Bowie helped programmer Ty Roberts develop an app called the “Verbasizer”, which took literary source material and randomly reordered the words to create new combinations that could be used as lyrics. The results appeared on several David Bowie albums.
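The Verbasizer's core idea, taking source sentences apart and recombining their words at random, can be illustrated in a few lines. This is a hypothetical sketch, not the Verbasizer's actual code; `cut_up`, its parameters, and the sample sentences are made up for illustration.

```python
import random
from typing import Optional

def cut_up(sentences: list[str], words_per_line: int, lines: int,
           seed: Optional[int] = None) -> list[str]:
    """Pool the words of all source sentences and deal them out in random order."""
    rng = random.Random(seed)  # seeding makes a run reproducible
    pool = [word for sentence in sentences for word in sentence.split()]
    result = []
    for _ in range(lines):
        # sample() draws distinct positions, so each word occurrence is used at most once per line
        result.append(" ".join(rng.sample(pool, words_per_line)))
    return result

source = [
    "the city sleeps under a silver rain",
    "we read the news in broken light",
]
for line in cut_up(source, words_per_line=5, lines=3, seed=7):
    print(line)
```

The randomness does the "composing"; choosing evocative source material and picking the usable lines remains the lyricist's job.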

11# In 1997, French composer and scientist François Pachet founded the music research team at Sony Computer Science Laboratory Paris and began research on music and artificial intelligence. His team has pioneered many technologies (with more than 30 patents) in electronic music distribution, audio feature extraction, and music interaction.

12# In 2002, researcher François Pachet designed a new algorithm called the “Continuator” at Sony Computer Science Laboratory. It was a genuinely new invention: it could learn from and interactively play with musicians performing live. More specifically, the Continuator could continue composing a piece of music from the point where the live musician stopped.
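Pachet's papers describe the Continuator as building variable-order Markov models of what the musician plays. The sketch below swaps in a much simpler first-order Markov chain to show the basic idea: learn which notes followed which, then continue from the last note played. The function names and the example phrase are hypothetical.

```python
import random
from collections import defaultdict
from typing import Optional

def learn_transitions(phrase: list[int]) -> dict[int, list[int]]:
    """For every note, record each note that directly followed it in the input phrase."""
    transitions: dict[int, list[int]] = defaultdict(list)
    for current, following in zip(phrase, phrase[1:]):
        transitions[current].append(following)
    return transitions

def continue_phrase(phrase: list[int], length: int, seed: Optional[int] = None) -> list[int]:
    """Continue from the musician's last note by repeatedly sampling an observed successor."""
    rng = random.Random(seed)
    transitions = learn_transitions(phrase)
    note = phrase[-1]
    continuation = []
    for _ in range(length):
        successors = transitions.get(note)
        if successors:
            # Successors that occurred more often appear more often in the list, so they are likelier.
            note = rng.choice(successors)
        else:
            # Dead end: this note was never followed by anything, so restart from any input note.
            note = rng.choice(phrase)
        continuation.append(note)
    return continuation

# MIDI note numbers for a short phrase played by the musician (C D E C E D C).
played = [60, 62, 64, 60, 64, 62, 60]
print(continue_phrase(played, length=8, seed=1))
```

A first-order chain only looks one note back; higher or variable orders look further back, which keeps more of the player's phrasing in the continuation.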

13# In 2009, a computer program called Emily Howell created a whole music album titled “From Darkness, Light”. The program was created by David Cope, a music professor at the University of California, Santa Cruz. Emily Howell is an interactive interface that registers and takes into account feedback from listeners and builds its own musical compositions from a source database. Cope attempts to teach the program through feedback so that it can cultivate its own, more personal style. The software is based on latent semantic analysis.

14# In 2010, the musical fragment “Iamus’ Opus one” was created. It is the first fragment of professional contemporary classical music ever composed by a computer in its own unique style.

Iamus is a computer cluster located at the Universidad de Málaga. Powered by Melomics’ technology, Iamus’s composing module takes about 8 minutes to create a full composition in different musical formats. One of its complete compositions is titled “Hello World!”.

15# In 2012, the computer program Emily Howell created her second album, titled “Breathless”.

16# In 2016, scientists from Sony Computer Science Laboratories unveiled a new AI-generated track called “Daddy’s Car”.

Researchers at Sony had been working on AI-generated music for years and had previously produced some notable jazz tracks using AI. However, this was the first time the Sony CSL Research Laboratory had released pop music composed by AI, and the results were quite impressive.

To write the song, the AI software “Flow Machines” drew on a massive database of songs, combining small elements of many tracks to create new compositions. The song was generated mostly from The Beatles’ original pieces, while the arrangement was done by human musicians.

17# In 2016, the IBM Watson computer system, in collaboration with musician and producer Alex Da Kid, wrote a sad, emotional song called “Not Easy”.

The IBM Watson supercomputer was used to create the song. To do so, the computer analyzed a huge number of articles, blogs, and social media posts in order to identify the most discussed topics of the time and characterize the overall emotional mood.

Watson combines artificial intelligence and sophisticated analytical software for optimal performance as a “question answering” machine. The supercomputer is named after IBM’s founder, Thomas J. Watson.

Surprisingly, the song took first place on the Billboard Top 40 chart.

18# In 2017, American Idol star Taryn Southern released her new album “I AM AI”, billed as composed entirely by artificial intelligence.

To make it, she used an AI music composition tool developed by the company Amper Music.

Simply put, Amper’s software uses internal algorithms to produce sets of melodies that match a given genre and mood.

Amper created the basic musical structures; everything else, including the lyrics, was Taryn Southern’s work.

The first song released was titled “Break Free”.

19# In 2019, a group called Dadabots set up a non-stop, 24/7 YouTube live stream playing heavy death metal generated entirely by AI algorithms.

It is worth mentioning that the group behind this project had produced several AI music albums before the live stream appeared.

It is the work of music researchers and technologists CJ Carr and Zack Zukowski, who have spent years experimenting with getting artificial intelligence to produce recognizable music in different genres. According to them, the algorithm creates fairly decent metal that doesn’t even require corrections, so they decided to let the AI compose tracks live on YouTube.

To train the neural networks, the developers used songs by the Canadian metal band Archspire, which are notable for their fast, aggressive tempo. As a result, the algorithm learned to layer fast drums, guitars, and vocals so that the output sounded like real death metal.

20# In 2019, Icelandic singer and songwriter Björk collaborated with Microsoft to create AI-generated music called “Kórsafn”, based on changing weather patterns and the position of the sun.

The composition is generated and played continuously in the lobby of Sister City, a hotel on New York’s Lower East Side that opened in the spring of 2019.

Kórsafn uses sounds from Björk’s musical archives, which she has compiled over the last 17 years, to create new arrangements. Using Microsoft’s AI and a live camera feed from the hotel roof, the music adapts to sunrises, sunsets, and changes in barometric pressure. The result is an endless string of new variations that creates a weather-related mood for hotel guests. The project is also intended to train Microsoft’s AI to better recognize dense and fluffy clouds, snow, rain, clear skies, and birds in different lighting and seasons.

AI Music Today — Where Are We Now?

Although more than 60 years have passed since the first experiments with algorithmic music composition, many music experts and researchers still consider these to be the very early days of the technology.

Nevertheless, artificial intelligence is already reshaping the global music industry and business across many significant areas. AI is undoubtedly changing the way businesses think about work, day-to-day operations, and business models in general.

Today an entire, fast-growing industry has been built around AI services for creating, processing, and analyzing music. Here are just some of the many services and applications:

- Flow Machines by Sony CSL. Funded by the European Research Council and coordinated by François Pachet, Flow Machines is a research and deployment project aimed at augmenting artists’ creativity in music. Its goal is to research and develop artificial intelligence systems that can generate music autonomously or in collaboration with human artists. According to the team, they turn musical style, which can come from individual composers, into a computational object that AI can read, replicate, process, and work on.

- Amper Music. Amper Music is a cloud-based platform designed to simplify creating soundtracks for movies and video games; it uses AI to help users create music in a variety of genres. Amper’s AI music tool performs, composes, and produces custom music for media content. The web application lets creators choose a composition’s style, mood, and length and tailor it to their content, with no musical knowledge or skills needed.

- Google Magenta. Google is one of the leaders in AI and machine learning. Magenta is a Google research project exploring the role of machine learning in creating art and music. It was started by researchers and engineers from the Google Brain team, but many others have contributed significantly. Within the project, new deep learning and reinforcement learning algorithms have been developed for generating songs, images, drawings, and other material. Magenta is also an exploration of building smart tools and interfaces that let artists and musicians extend their processes using these models. It uses TensorFlow and releases new models and tools as open source on GitHub.

- Aiva. AIVA Technologies is the creator of an AI music engine for producing soundtracks. It enables composers and creators to make original music or upload their own work to create new variations. The AIVA Technologies team develops AI that can compose emotional soundtracks for ads, video games, and movies. Besides creating music from scratch, AIVA can produce variations of existing songs. Because the engine produces original music, it makes creating corporate or social media videos much easier by eliminating the need to go through the music licensing process.

- IBM Watson Beat. Watson Beat is an IBM project that uses mathematically creative ways of imitating the rules of music theory and applying them to compose rhythms and melodies. Its open source code is available on GitHub, and it employs two machine learning methods to assemble its compositions: reinforcement learning, which uses the tenets of modern Western music theory to create reward functions, and a Deep Belief Network (DBN) trained on a simple input melody to create a rich, complex melody layer.

- Melodrive. Melodrive is a real-time adaptive music generator for interactive media. Designed for uses such as interactive experiences, video games, and music branding, it creates music that adapts to its media environment, ramping up, slowing down, and transitioning through emotions to give users the best possible experience. Melodrive is one of the first AI systems that can compose emotional, unique music in real time, aiming to match the mood and overall style of the content it accompanies.

- Brain.FM. Brain.FM is a web and mobile application that provides atmospheric music to encourage rest, relaxation, and focus. Created by a team of engineers, entrepreneurs, musicians, and scientists, the company’s music engine uses AI to arrange musical compositions and add acoustic features that help listeners enter certain mental states during 15-minute sessions. The idea is that AI-generated music can make our brains more productive and focused. The platform suits people who spend a lot of time at work and find it hard to maintain focus while performing important tasks.

- Spotify. Spotify is a very popular music streaming service whose library includes millions of songs, albums, and podcasts. It offers intelligent playlists such as “Discover Weekly”, which presents music the user hasn’t yet heard on the platform, customized to their listening habits using AI and machine learning. Spotify uses a method called collaborative filtering: it collects as much data as possible about a user’s listening behaviour and compares it with data collected from other users across the globe. These comparisons are then used to improve recommendations and suggest new music for listeners to enjoy.

- ORB Composer. ORB Composer is music composition software that helps you get inspired and create ideas and songs faster. To use it properly, you need to know at least some basic rules of music composition. The AI composer comes bundled with a rich collection of chord progressions containing many chords used in popular music. The tool is aimed at artists interested in experimenting with creating music with AI and discovering new musical styles. You can start creating compositions simply by arranging different blocks of music.

- Shazam. Shazam is one of the first consumer AI services and has officially been part of Apple since 2018. It identifies songs within a few seconds by taking a “digital fingerprint” of the audio, matching it against a massive library of previously fingerprinted music, and presenting the matched song to the user. Suppose you are at a public event, you like the music that is playing, and you are too embarrassed to ask your friend or the bartender what it is. You can open the Shazam app and tap the button: a digital fingerprint of the audio is created and, within seconds, matched against a database of millions of tracks and TV shows. The app then gives you the track name, the artist, and information such as lyrics, videos, the artist’s biography, concert tickets, and recommended tracks. It also lets users buy or listen to the song through partner services.

- MuseNet. MuseNet is a deep neural network that can generate 4-minute musical compositions with 10 different instruments and can combine styles from country to Mozart to the Beatles. It was created by OpenAI, an artificial intelligence research laboratory based in San Francisco, California. You can give it a short segment of music to get it started or have it start from scratch. According to the researchers behind the project, the system can pay attention to music over long periods of time and capture the broad context of a song’s melodies. Using this context, the system is trained to predict the next note in a sequence, which is the idea behind its music generation process.

- AWS DeepComposer. AWS DeepComposer is, according to Amazon, the world’s first musical keyboard combined with a generative AI service (expected to be available for $99 in Q1 2020). It is a 32-key, 2-octave keyboard designed to give developers hands-on experience with AI-generated music. The keyboard is meant to help developers learn about machine learning and turn melodies into completely original songs in a matter of seconds, all powered by AI and without writing a single line of code.
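To make the idea of Watson Beat's "reward functions built from music theory" more concrete, here is a toy scoring function of the kind a reinforcement-learning composer could try to maximise. The rules and weights (reward in-key notes, penalise leaps wider than a fifth, reward ending on the tonic) are assumptions chosen for illustration; they are not Watson Beat's actual reward, and `theory_reward` is a hypothetical name.

```python
MAJOR_SCALE_STEPS = {0, 2, 4, 5, 7, 9, 11}  # semitone offsets of a major scale from its tonic

def theory_reward(melody: list[int], tonic: int = 60) -> float:
    """Score a melody (MIDI note numbers) against a few simple, assumed music-theory rules."""
    reward = 0.0
    for note in melody:
        # +1 for every note inside the major scale of the tonic, -1 for every note outside it
        reward += 1.0 if (note - tonic) % 12 in MAJOR_SCALE_STEPS else -1.0
    for previous, current in zip(melody, melody[1:]):
        # -2 for every leap wider than a perfect fifth (7 semitones)
        if abs(current - previous) > 7:
            reward -= 2.0
    if melody and (melody[-1] - tonic) % 12 == 0:
        reward += 3.0  # +3 for resolving on the tonic
    return reward

stepwise = [60, 62, 64, 65, 67, 65, 64, 62, 60]
jumpy = [60, 61, 73, 66]
print(theory_reward(stepwise))  # → 12.0
print(theory_reward(jumpy))     # → -4.0
```

In a reinforcement-learning setup, an agent proposing notes would receive a score like this as its reward and gradually learn to favour stepwise, in-key melodies that resolve on the tonic.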
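The collaborative filtering mentioned for Spotify can be sketched in its simplest, user-based form: measure how similar two listeners' play counts are (cosine similarity), then recommend unheard tracks that similar listeners play a lot. Spotify's production system is far more elaborate and is not reproduced here; the listener names, track IDs, and play counts below are made up.

```python
from math import sqrt

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse play-count vectors (missing track = 0 plays)."""
    dot = sum(u[t] * v[t] for t in u.keys() & v.keys())
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def recommend(user: str, plays: dict[str, dict[str, float]], k: int = 2) -> list[str]:
    """Rank tracks the user hasn't played by how much similar listeners played them."""
    heard = plays[user]
    scores: dict[str, float] = {}
    for other, other_plays in plays.items():
        if other == user:
            continue
        similarity = cosine(heard, other_plays)
        for track, count in other_plays.items():
            if track not in heard:
                # Plays by listeners with similar taste count for more.
                scores[track] = scores.get(track, 0.0) + similarity * count
    return sorted(scores, key=scores.get, reverse=True)[:k]

# Made-up play counts: listener -> {track: number of plays}
plays = {
    "ana": {"song_a": 12, "song_b": 5},
    "ben": {"song_a": 10, "song_b": 4, "song_c": 7},
    "chloe": {"song_b": 1, "song_d": 9, "song_e": 3},
}
print(recommend("ana", plays))  # → ['song_c', 'song_d']
```

Ana's listening closely resembles Ben's, so Ben's favourite unheard track ranks first, even though Chloe played her own top track more often.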
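Shazam's "digital fingerprint" matching, as Avery Wang described it publicly in 2003, pairs spectrogram peaks into hashes and looks for many hash matches that agree on the same time offset. The sketch below assumes the peaks have already been extracted as (time frame, frequency bin) pairs, skipping the audio analysis entirely; the `fan_out` value, the function names, and the peak data are all hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

Peak = tuple[int, int]       # (time frame, frequency bin) of a spectrogram peak
Hash = tuple[int, int, int]  # (anchor frequency, target frequency, time delta)

def fingerprints(peaks: list[Peak], fan_out: int = 3) -> list[tuple[Hash, int]]:
    """Pair each peak with the next few peaks in time; keep the anchor's time."""
    ordered = sorted(peaks)
    result = []
    for i, (t1, f1) in enumerate(ordered):
        for t2, f2 in ordered[i + 1 : i + 1 + fan_out]:
            result.append(((f1, f2, t2 - t1), t1))
    return result

def build_index(library: dict[str, list[Peak]]) -> dict[Hash, list[tuple[str, int]]]:
    """Map every hash to the (song, anchor time) pairs where it occurs."""
    index: dict[Hash, list[tuple[str, int]]] = defaultdict(list)
    for song, peaks in library.items():
        for h, t in fingerprints(peaks):
            index[h].append((song, t))
    return index

def identify(sample: list[Peak], index: dict[Hash, list[tuple[str, int]]]) -> Optional[str]:
    """Vote for (song, time offset); a real match yields many hashes sharing one offset."""
    votes: Counter = Counter()
    for h, t_sample in fingerprints(sample):
        for song, t_song in index.get(h, []):
            votes[(song, t_song - t_sample)] += 1
    if not votes:
        return None
    (song, _offset), _count = votes.most_common(1)[0]
    return song

# Made-up peak lists standing in for spectrogram analysis of two songs.
library = {
    "song_x": [(0, 40), (2, 55), (3, 31), (5, 47), (7, 60), (8, 35), (10, 52)],
    "song_y": [(0, 20), (1, 70), (4, 44), (6, 25), (9, 66), (11, 38)],
}
index = build_index(library)

# A short excerpt of song_x starting at frame 3, plus one spurious noise peak (1, 90).
sample = [(0, 31), (2, 47), (4, 60), (5, 35), (1, 90)]
print(identify(sample, index))  # → song_x
```

Because the vote is keyed on the time offset, hashes broken by the noise peak simply drop out, while the surviving ones still agree on a single offset into `song_x`.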
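MuseNet itself is a large transformer network, but the "predict the next note" loop around any such model can be sketched on its own. Below, `toy_model` is a hypothetical stand-in for a trained network that returns a score (logit) for each candidate next note; the loop turns the scores into probabilities with a temperature-scaled softmax, samples one note, appends it, and repeats.

```python
import math
import random
from typing import Callable, Optional

Model = Callable[[list[int]], dict[int, float]]  # context -> {candidate note: logit}

def softmax(logits: dict[int, float], temperature: float) -> dict[int, float]:
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = {note: score / temperature for note, score in logits.items()}
    peak = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {note: math.exp(s - peak) for note, s in scaled.items()}
    total = sum(exps.values())
    return {note: e / total for note, e in exps.items()}

def generate(model: Model, prompt: list[int], steps: int,
             temperature: float = 1.0, seed: Optional[int] = None) -> list[int]:
    """Autoregressive generation: predict, sample, append, repeat."""
    rng = random.Random(seed)
    sequence = list(prompt)
    for _ in range(steps):
        probs = softmax(model(sequence), temperature)
        notes, weights = zip(*probs.items())
        sequence.append(rng.choices(notes, weights=weights, k=1)[0])
    return sequence

def toy_model(context: list[int]) -> dict[int, float]:
    """Hypothetical stand-in for a trained network: favours small steps from the last note."""
    last = context[-1]
    return {last + step: -abs(step) for step in (-4, -2, -1, 0, 1, 2, 4)}

print(generate(toy_model, prompt=[60, 62, 64], steps=8, temperature=0.7, seed=3))
```

The temperature is the usual creativity dial: values below 1 make the model stick to its favourite continuations, while values above 1 let less likely notes through more often.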

AI Music — What the Future Holds?

Progress in the AI music field has accelerated rapidly in the past few years, bringing notable changes to music technology. AI points to a promising future for music technology and will inevitably transform the music industry.

Like many revolutionary technologies, AI generates fears of disruption, as its influence is likely to spread significantly and change the way we experience music over the coming years. It is therefore understandable that, for independent composers and producers who create original music, a technology that can produce comparable work in less time and for less money is a frightening prospect.

Many expect the jobs of music producers and songwriters to be augmented as AI becomes integrated into music’s creative sphere. According to a 2018 report from the World Economic Forum, machines and algorithms are expected to create 133 million new roles and displace 75 million jobs by 2022. According to a McKinsey report, 70% of companies may have adopted at least one type of AI technology by 2030. These figures may offer a glimpse of the music industry in the near future.

Even though similar innovations were disruptive in their early stages, they were eventually incorporated as new tools that complement and improve human capabilities. If we see AI as an extension of our creative process, we can predict that the industry will continue to evolve into a collaborative environment where humans and machines make music together. Artificial intelligence is changing the way artists think about music creation and opening up opportunities for musicians to work creatively alongside machines. Many composers who already work with AI hope the technology will become a democratizing force and an essential part of everyday music-making. Furthermore, many believe it could make music composition accessible to a wider audience and help form a completely new music industry culture.

As AI pushes music forward by driving growth and innovation, the technology’s impact on the music industry as a whole remains to be seen. However, there is one essential factor we need to acknowledge: personalization.

Customers crave personalization because every customer is a unique individual who wants an amazing experience, and an amazing experience means different things to different people.

The problem is that personalization can be an overwhelming and complicated task, because scaling it takes a great deal of time and effort. Artificial intelligence is the obvious solution to this problem.

AI can achieve mass personalization by harnessing huge amounts of data from many different sources and uncovering patterns in customer behaviour and personality. It uses interaction history to build a specific profile of each customer, allowing companies to deliver high levels of personalization in customer engagement, services, and products. With machine learning, companies can introduce as many product variations as they choose. Instead of being restricted to the variables and traits that you consider important, the machine can use the ones it has learned and identify what resonates with each individual customer. This enables far more complex personalization powered by machine learning algorithms and applications.

AI is one of the most exciting technologies, bringing new opportunities and possibilities to consumers and companies. It gives music listeners an unprecedented opportunity to become co-creators and get involved at a whole new level, on a massive scale. This means that in the future, music will be able to adapt perfectly to the listener, rather than the listener having to adapt to the music.

Some innovative companies already use artificial intelligence to drive personalized music services and product development. For example, an AI music company called MUSICinYOU.ai offers personalized, completely unique AI-generated musical compositions based on your individual personality traits and characteristics. You first register and take a specifically designed 300-question personality test, and the AI takes care of the rest. Although it is a small-scale project by a newly established company, it has realized a fascinating idea and produces some very interesting results (you can listen to some examples after registering).

I believe personalized AI music will be the prime driver of AI development and marketing success in the near future. As artificial intelligence takes baby steps towards an exciting new future, we can only imagine the possibilities and applications to come.

Here are two possible future applications, or imaginary scenarios, for you to consider:

An online service that creates a completely personalized music album you can buy. After you make a purchase, the AI searches for all publicly available information about you on the internet, analyzes it, determines what kind of music you would like, and then generates it for you. This would bring inspirational creativity straight to the customer on a more personal level than ever before.

You buy a ticket to an upcoming AI music concert online, connecting to the ticket service with your social network profile. The concert repertoire and program remain unknown when you buy the ticket. Shortly before ticket sales close, the AI quickly and efficiently analyzes the public social profile information of everyone who has bought a ticket. It then divides the audience into several interest groups, generates the most appropriate music for each group, and performs it on separate stages to better satisfy all visitors. This would offer a truly unprecedented experience, encouraging social connections and taking community building to a whole new level.

Thank you for reading and please share your thoughts and ideas on the subject in the comments section!
