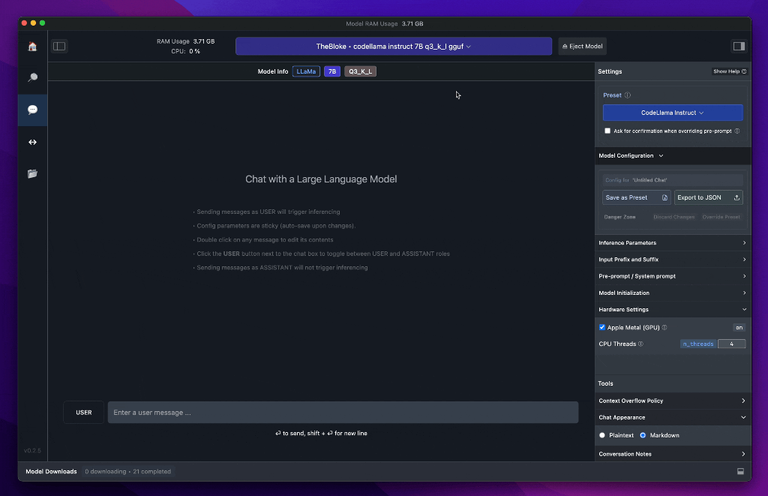

A Child Could Run A Private Local LLM

The process is less complicated than using Zoom:

- Install LM Studio (https://lmstudio.ai) or a similar app

- Select a model and download it
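Once a model is downloaded, it can also be queried from a script. The sketch below is not part of the original post: it assumes LM Studio's local server has been started from the app, that it listens on the default `http://localhost:1234/v1` with an OpenAI-compatible chat endpoint, and that `"local-model"` is only a placeholder name. The helper names `build_chat_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port (1234).
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build (but do not send) a chat completion request for a local server."""
    payload = {
        "model": model,  # placeholder; use the name of the model you loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Say hello in one sentence."))` should print the model's reply. Nothing leaves the machine, since the request goes to localhost.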

The real bottleneck is hardware. I could only run models up to 13B parameters. Results were worse than GPT-3.5, and slow.
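To see why 13B is roughly the ceiling on consumer hardware, here is a back-of-the-envelope sketch. It assumes memory is dominated by the weights (parameters × bits per weight ÷ 8) and ignores the context cache and runtime overhead, so real usage will be somewhat higher. The function name is made up for illustration.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Rough size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


# Compare common precisions for a 13B-parameter model.
for bits in (16, 8, 4):
    print(f"13B at {bits}-bit: ~{weight_memory_gb(13, bits):.1f} GB")
```

By this estimate a 13B model needs about 26 GB at 16-bit and about 6.5 GB at 4-bit, which is why quantized downloads are the usual choice on a laptop.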

Posted via D.Buzz

Even fine-tuning a model has become far easier than it used to be. I would not say a child could pick up the process, but if you have some experience working in the terminal and basic familiarity with AI, there are solutions already set up by experts.

Ran out of RAM? Use swap files. 😂

Just checked the screenshot; I take back my last comment.

He should use swap files.

I gave up on local LLMs after a few hours of playing around. I will have to wait until I get a better laptop. Until then, I will stick with Brave's Leo or ChatGPT.

A child will save the world...

Thanks for this!

Yeah, when you say child, you mean an AI... like an AGI with the mind of a child!
