OpenAI Releases GPT-4 Omni Chatbot for Combined Text, Audio and Video Input/Output

KEY FACT: OpenAI has released the latest model of its chatbot, named "GPT-4 Omni", for combined text, audio, and video input and real-time output. The new version of ChatGPT provides for a more natural form of human-computer interaction.

GPT-4 Omni. Source: OpenAI

OpenAI’s GPT-4 Omni Chatbot Can Reason Across Text, Audio and Video in Real Time

OpenAI has released a major upgrade to its chatbot, called GPT-4o (“o” for “omni”). The new flagship model can reason across audio, vision, and text in real time, and it speaks in a more convincingly human way. In effect, users can livestream with ChatGPT. The upgrade was announced on Monday, May 13.

According to the press release, GPT-4o is a step towards much more natural human-computer interaction because it accepts any combination of text, audio, and image as input and generates any combination of text, audio, and image as output. This is a significant breakthrough in the generative AI industry.

A series of demos released by OpenAI shows that GPT-4 Omni can help users with tasks such as interview preparation (by making sure they look presentable for the interview) and calling a customer service agent to get a replacement iPhone.

GPT-4o can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

It matches GPT-4 Turbo performance on text in English and code, with significant improvement on text in non-English languages, while also being much faster and 50% cheaper in the API. GPT-4o is especially better at vision and audio understanding compared to existing models - source.

OpenAI said in a recent X post that the text and image-only input version was launched on May 13, with the full version set to roll out in the coming weeks.

Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: http://openai.com/index/hello-gpt-4o/

Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks. - source
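For readers curious what "text and image input in the API" might look like in practice, here is a minimal sketch of a request body that pairs a text prompt with one image. It assumes the message layout used by OpenAI's Chat Completions API (a list of content parts of type `text` and `image_url`) and the model name `gpt-4o`; the helper name `build_text_image_request` and the example URL are made up for illustration, and nothing is actually sent over the network.

```python
import json


def build_text_image_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a chat-style request body that pairs a text prompt with one image.

    Hypothetical helper: it only assembles a dictionary and does not call any API.
    """
    return {
        "model": model,  # assumed model identifier
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_text_image_request(
        "Do I look presentable for an interview?",
        "https://example.com/selfie.png",  # placeholder URL, not a real image
    )
    # Print the JSON that a client would send as the request body.
    print(json.dumps(body, indent=2))
```

Keeping the text and the image as separate parts of a single user message is what lets the model treat them as one combined input. Check the official API reference before relying on any field name used here.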

Greg Brockman, President and Co-Founder of OpenAI, also stated in an X post that the new version of ChatGPT provides for a more natural form of human-computer interaction. In a related blog post, he expressed his surprise at the uniqueness of the updated AI chatbot.

Introducing GPT-4o, our new model which can reason across text, audio, and video in real time.

It's extremely versatile, fun to play with, and is a step towards a much more natural form of human-computer interaction (and even human-computer-computer interaction): - source

To showcase the new chatbot's capabilities, the press release shared screenshots of sample inputs and their respective outputs. See below:

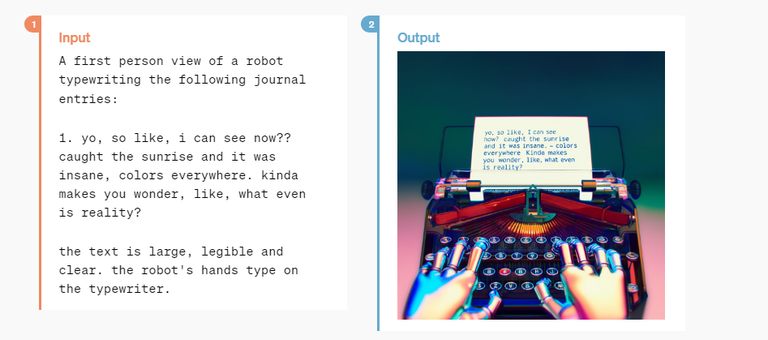

Sample Input/Output 1

A first person view of a robot typewriting the following journal entries:

1. yo, so like, i can see now?? caught the sunrise and it was insane, colors everywhere. kinda makes you wonder, like, what even is reality?

the text is large, legible and clear. the robot's hands type on the typewriter.

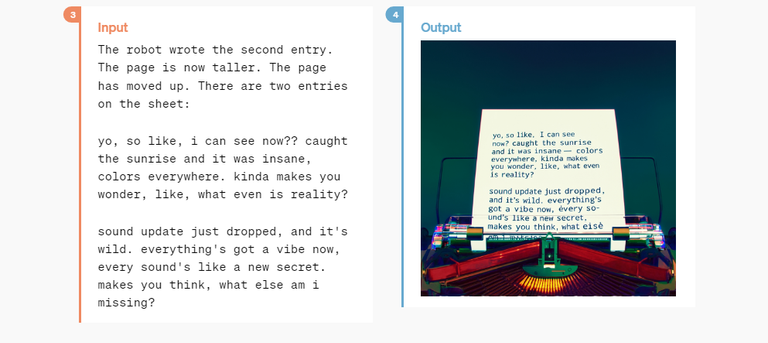

Sample Input/Output 2

The robot wrote the second entry. The page is now taller. The page has moved up. There are two entries on the sheet:

yo, so like, i can see now?? caught the sunrise and it was insane, colors everywhere. kinda makes you wonder, like, what even is reality?

sound update just dropped, and it's wild. everything's got a vibe now, every sound's like a new secret. makes you think, what else am i missing?

OpenAI also revealed that GPT-4o will be available to both paid and free ChatGPT users and will be accessible through OpenAI’s API. This is another landmark development in the AI world. We hope that other major players in the sector will create their own versions too.

What are your thoughts on the future of this development and where exactly are we headed?

If you found this article interesting or helpful, please hit the upvote button and share it so other Hive friends can see it. More importantly, drop a comment below. Thank you!


Let's Connect

Hive: inleo.io/profile/uyobong/blog

Twitter: https://twitter.com/Uyobong3

Discord: uyobong#5966
