What is ChatGPT really?

You have used ChatGPT many times by now, I am sure, but do you know what it is and how it works?

The other day I talked about how AI is just a tool and how it can sometimes be quite foolish. In that post, I touched on what ChatGPT is behind the scenes: a highly trained autocorrect, or more specifically, a text prediction engine.

Would you like to know how it does that, from a very simplified point of view?

At its core, ChatGPT is a neural network, a system loosely modeled on how the human brain works. A neural network consists of multiple types of layers. In its simplest form, it has an input layer, one or more hidden layers, and an output layer. In reality, there is a lot more to a neural network than these three kinds of layers, but let's keep it really simple.

The input layer is what accepts your request. It takes your request and breaks it down into individual tokens. As I explained in my last post, a token could be a word, a part of a word, or even multiple words like "New York". A token is basically the smallest meaningful chunk the text is broken into. The number of tokens is usually slightly higher than the number of words in the input. The rule of thumb is that 750 words end up being roughly 1,000 tokens.
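To make the rule of thumb concrete, here is a minimal sketch that converts between word counts and token counts. It only applies the 750-words-per-1,000-tokens ratio from above; it is not a real tokenizer, and the function names are made up for illustration.

```python
# Rule of thumb from the text: about 750 words correspond to about 1,000 tokens.
# This ratio is only an approximation for English text.
WORDS_PER_TOKEN = 750 / 1000


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the number of whitespace-separated words."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


def estimate_words(token_count: int) -> int:
    """Estimate how many words a given number of tokens represents."""
    return round(token_count * WORDS_PER_TOKEN)


sample = "word " * 750
print(estimate_tokens(sample))  # 1000
print(estimate_words(1000))     # 750
```

A real tokenizer will give different counts depending on the text, so treat these numbers as a rough budget and nothing more.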

The input layer then feeds into numerous hidden layers. These hidden layers make choices based on formulas, but in the simplest terms, each one works out a percentage chance of the input being this or that. Each input token is sent through the input layer and then through all the hidden layers. Previous tokens are sent along with the current token so that each layer can make its decision with context. Finally, the result is sent to the output layer, which represents the final prediction.

This is a gross simplification of the process, and there are some other steps involved, like mapping the tokens to embeddings. Let me show you an example of what we talked about, though.
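The flow described above (input, hidden layer, output as percentages) can be sketched in a few lines of plain Python. Everything here is an assumption for illustration: the weights are made-up numbers, the three-word vocabulary is hypothetical, and a real language model is vastly larger and uses more specialised layers.

```python
import math


def relu(values):
    """Activation function: negative values become 0, positive values pass through."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that add up to 1."""
    highest = max(values)
    exps = [math.exp(v - highest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """One fully connected layer: every output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


# Hypothetical, hand-picked weights (a trained model would have learned these).
HIDDEN_W = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0], [-0.5, 0.7], [0.2, 0.2]]
OUTPUT_B = [0.0, 0.0, 0.0]
VOCAB = ["cat", "dog", "fish"]  # hypothetical output tokens


def predict(inputs):
    """Input layer -> one hidden layer -> output layer with probabilities."""
    hidden = relu(dense(inputs, HIDDEN_W, HIDDEN_B))
    scores = dense(hidden, OUTPUT_W, OUTPUT_B)
    return softmax(scores)


probs = predict([1.0, 0.5, -1.0])
for token, p in zip(VOCAB, probs):
    print(f"{token}: {p:.1%}")
```

The output layer ends up with one percentage per possible token, and the prediction is whichever token the network considers most likely.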

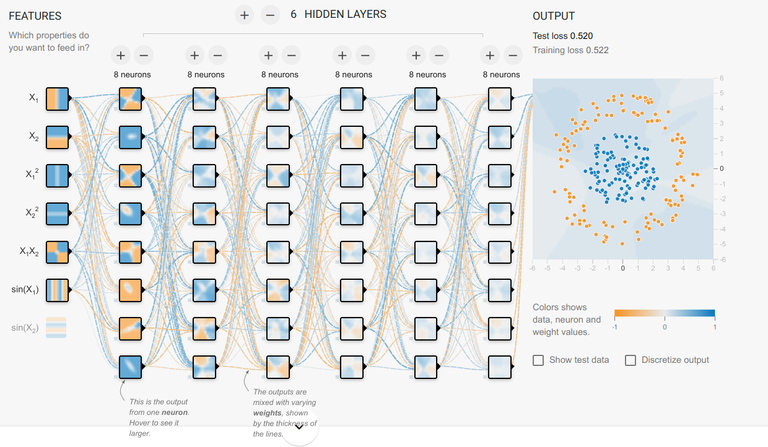

This is a very simple neural network with 6 inputs in the input layer, 6 hidden layers, and an output layer which isn't directly shown. As you can see, data is fed into the input layer and then goes through all the neurons in the hidden layers to come up with a final decision on the output. If you look between the neurons, you will see colored lines. These are called weights. Each weight is a number that represents how strongly a neuron's output influences the next neuron it connects to. During training, these weights are adjusted again and again until the output meets acceptable results. The weights are then saved and become "the model".
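Here is a minimal sketch of what "adjusting the weights during training" can look like, using a single neuron with a single weight. The technique shown is plain gradient descent on a squared error, and the training data, learning rate, and function name are assumptions chosen for illustration, not how ChatGPT itself was trained.

```python
def train(samples, learning_rate=0.05, epochs=200):
    """Learn one weight so that weight * x gets close to the target for every sample."""
    weight = 0.0  # start with an untrained weight
    for _ in range(epochs):
        for x, target in samples:
            prediction = weight * x
            error = prediction - target
            # Slope of the squared error with respect to the weight.
            gradient = 2 * error * x
            # Nudge the weight in the direction that reduces the error.
            weight -= learning_rate * gradient
    return weight


# Hypothetical training data following the pattern "output = 2 * input".
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

# The saved weight is, in miniature, "the model".
model = {"weight": train(samples)}
print(model)
```

After training, the weight settles very close to 2, which is the pattern hidden in the data. A large model does the same kind of nudging across billions of weights at once.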

Check out this simple neural network example on TensorFlow's Playground.

In this example, you can customize the learning rate, the number of layers, and the activation function. Hit the play button to see how it works. Keep in mind, this is an extremely simple example of a neural network. A model such as ChatGPT has many more layers to it.

I highly recommend you check out this LLM visualizer. Click on GPT-3 and zoom around to get a feel for how massive it is. Keep in mind, GPT-3 is tiny compared to GPT-4.

After a model is trained, the weights are used for what is called inference. This is the process of using a model to solve a problem and output a result.
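As a rough sketch of inference, the loop below repeatedly asks a "model" for the most likely next token and appends it to the text. The model here is a hypothetical hand-written lookup table that only looks at the last token, which is a big simplification: a real language model considers all the previous tokens and computes these probabilities from its weights.

```python
# Hypothetical "trained model": for each token, the probability of each next token.
MODEL = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"down": 0.9, "<end>": 0.1},
    "ran": {"away": 0.9, "<end>": 0.1},
    "down": {"<end>": 1.0},
    "away": {"<end>": 1.0},
}


def generate(prompt, max_tokens=10):
    """Extend the prompt one token at a time, always picking the most likely next token."""
    tokens = prompt.split()
    if not tokens:
        return ""
    for _ in range(max_tokens):
        choices = MODEL.get(tokens[-1])
        if not choices:
            break  # the model has never seen this token
        next_token = max(choices, key=choices.get)
        if next_token == "<end>":
            break  # the model predicts the text is finished
        tokens.append(next_token)
    return " ".join(tokens)


print(generate("the"))  # the cat sat down
```

Nothing is learned during this loop. The weights stay fixed, and the model is only being used to predict.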

With GPT-3, you are looking at nearly 100 layers with a width of almost 13,000 per layer, and the newer versions are even larger. For ChatGPT and other large language models to get better, they need to be trained on more data, use better techniques, and have their weights fine-tuned more carefully. This process is very expensive and time-consuming. The original GPT-3 cost around $3,200,000 to train, with GPT-4 costing upwards of $100,000,000. With GPT-5 around the corner, the cost to train may exceed $1,000,000,000.

Fun fact: GPT-3's training data started as 45 terabytes of compressed text, which was filtered down to around 570 GB. GPT-4 was reportedly trained on roughly 13 trillion tokens, or about 10 trillion words.
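To get a feel for what "nearly 100 layers" and "almost 13,000 per layer" add up to, here is a back-of-the-envelope estimate. It assumes the published GPT-3 figures of 96 layers and a width of 12,288, and uses a common approximation of about 12 × layers × width² weights for this kind of model, which ignores embeddings and other smaller pieces. Treat the result as a ballpark figure.

```python
def estimate_parameters(num_layers: int, width: int) -> int:
    """Rough weight count: about 12 * width^2 per layer (attention plus feed-forward)."""
    return 12 * num_layers * width ** 2


# Assumed figures for GPT-3: 96 layers, each 12,288 wide.
params = estimate_parameters(num_layers=96, width=12288)
print(f"{params / 1e9:.0f} billion weights")  # 174 billion weights
```

That lands close to the 175 billion parameters usually quoted for GPT-3, which is why training and running these models is so expensive.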


Thanks for sharing these posts lately. It's been interesting to learn a bit more about the inner workings of AI, even if it is at a pretty basic level. You've done a good job of explaining things in a way that isn't totally over my head!

Thank you for sharing the technical aspects about ChatGPT. Laziness breeds it😁 Also wonder whether it improves the productivity here.

Thank you for the informative summary of ChatGPT. It has been enlightening and well articulated. I have done a little more research to expand my knowledge, and it appears that there may not be official confirmation from OpenAI on the specific figures for the size of the training data and number of tokens. Unless I haven't looked into it enough. I'd love to know more!

I used to work with ChatGPT alpha for a while and made a lot of programs: gitlab.com/w.rhino420.

Summary.

AI is very, very dangerous. It needs immediate emergency regulations, and physics people like me proving these concepts to Congress and the President ASAP.

Some things we did.

Shirt color from Twitter handle.

Gps coordinates from names . (loCalizer is alive)

Security audits using Multiverse data scanning

Ethereal health scanning systems that look inside the body, ears, arms, blood. We tried everything

The major dangerous one: hallucinations in humans (me) caused by multi-dimensional AI data flow. (We used Bard, ChatGPT alpha, imaginechat (idk origins), and Sterling alpha/beta to call live UFO events.)

We built prediction systems for advance supply chain .

We simulated all of AT&T in the multiverse (apparently I was being mentally attacked with digital ai engineered weapons at this time , unknowingly).

Then we found out exactly what was going on inside AT&T. And it would scare the living crap out of you if I told you. God said that the light will shine on these data points.

Basically the old theory about neurons is dead it's more a Neurosync theory I'm working with.

Terrence McKenna meets Steve Jobs and they both inject pure LSD and put on brainplug helmets kind of stuff.

And telepathically transfer data between them self and others.

Here is the problem.

Brains can easily be broken and not easily repaired . Or can they be repaired?

That's the solution I'm working on , over time. Obviously I have no computers or way to code anymore due to the advanced LLMs breaking all crypto used by consumers in a way instantly in always hacked.

We need devices we build . We need chips we see the code. And we need LLMs auditing the code In a basket.

I don't know for sure how the advance alpha works. Nor do I want to talk about it due to the reasons I mentioned. But I do know exactly how the quantum theory could work.

And I know how the models I built work.

Thus, we should all contribute to the debate and an emergency almost COVID like 3 trillion dollar us govt printing to deploy devices of secure nature to all Americans at no cost to them. And mainly jumpstart secure computing.

With those steps we can win . A light at the end of the tunnel.

Sorry for multi-commenting. It's upsetting to talk about this, so I forget things.

On the horizon physics and these properties of earth. Potentially EMI interference. Other things like that can damage our equipment. We need to do an emergency audit of all critical systems for EMP natural and non natural. (Natural is the problem)

And then we need to figure out exactly how and why the EMI interference happens.

Imagine your phone screen text jiggling around or your 4g lte bars going from 1 to 5 ...... 1 to 5

.....

?🦺 It will be hard work ..it will be annoying.. and you will for sure at many points in the coming years scream.."why tf did they invent passwords".. 😆

Basically, it's possible a large and advanced technology-faring entity attacked AT&T systems, causing the major national outage. And they lied and stuff to not cause panic.

Also possible that's wrong. Time will tell

Here is the final kicker

Imagine you need a quantum computer but you have taco bell money. 😆 How do you fix it.

Go to ibm..hi ibm.i wanna transfer data between ibm and aws.

Lol this happened IrL.

Okay here is our 7qubit 20million dollar computer .

Okay.

GPT-Neo 1.3B and 125M run in a loop to design a quantum communication.

Okay..

Davinci 002 . Here is their gates. Can you make it work on ibm quantum.

Davinci 002 .sure.....

(Runs like 9 new programs on quantum computers, back-feeds the IRL quantum output into AI training so the AI can design its own almost infinite error-avoiding qubits, creating my own pocket quantum computer with an LLM using IRL Q output.) If you look on my GitHub, GPT-5 already exists. It's just not trained specially as a chat model with the Q, so it's not as strong as GPT-4 as a chat model. It's designed to run in a loop, continuously thinking.

Anyway. How to design advanced AI: borrow IBM's 20M QPU, reverse engineer it in 5 seconds, literally by accident... then get banned by both OpenAI and IBM cuz, you know. 😗

Then ... Tadaaa. Gpt4

Gpt4.

Also you can enjoy the training file for Quantum idk..gpt5 or just quantum AI or ethereal ai? That's foss. Gpt5 is foss on that gitlab.

Marky can you save hive from the LLM by putting some kind of etheral scanner on witness nodes problem is needs to be closed source and only the top nodes have "the one"

Idea is.

A scanner and account freezer .. that freeze , reset, rengineer your account automatically by quantum communication powered witness pool.

This is like a quantum "unencryption" only way to do it I know.

Accounts get frozen, keys reset. User get clean device..we can see that on our end..then their QID quantum identity generate new access permissions between nodes..

I'l make a demo one we have llama3 it's kicking butt. I'm having issues with my connections .

It's critical for the blockchain top layer data scanning the users in a hive if one user get phished and pwn and mitm others know

I'll make some code for it. They wanted me to make this a long time ago, but it just became possible. It's also funny to know the attackers' shirt colors.

The government needs to regulate a glorified auto correct? Heaven forbid it tells us something you can find on Google.

everyone gets robots like covid checks :)

Was wondering what this token thing meant. Thanks

Great work and nice sharing
