¿What's Hive's DQN after HF28? ...it's my MeMe #195

In general, things usually are what they usually are. But I think that with the right attitude, provision, motivation and willingness to improve them, it's always possible to refine the AGI human algorithm in the measure and to the extent that we set our minds to it.

Posts with rewards set to burn still pay curators.

Only author rewards are burned.

If you think this type of content should be eligible for author rewards, then «click» here.

Thanks for your support.

¿What is DQN?

DQN (Deep Q-Network) is a reinforcement learning method that uses deep neural networks to learn optimal actions in complex environments by approximating the Q-value function. Instead of a traditional Q-table, a neural network takes the state as input and outputs Q-values for each possible action. Allowing the agent to generalize across many states and handle high-dimensional inputs like, by instance, collective images. Key components include an experience replay buffer to store and randomly sample past experiences and a target network in order to stabilize training.

¿How DQN works?

- Q-value estimation: The core idea is to use a neural network to learn the Q-value function which estimates the expected long-term reward of taking a specific action in a given state.

- Input and output: The neural network takes the current state (e.g., an image from a game) as input and outputs Q-values for each possible action.

- Action selection: The agent chooses the action with the highest Q-value, but often uses an epsilon-greedy strategy to balance exploration trying random actions and exploitation although frequently choosing the best-known action.

Key techniques for stability and performance:

- Experience Replay: The agent stores its experiences (state, action, reward, next state) in a replay memory. During training, it samples random mini-batches from this memory which breaks the correlation between consecutive experiences and improves stability.

- Target Network: To prevent the target Q-values from constantly chasing the predictions, a separate "target" network is used to calculate the Q-target. The weights of the target network are updated less frequently, providing a more stable target for the main network to learn from.

What makes DQN effective:

- Handles complex environments: DQN can handle environments with a massive number of states that would be impossible to store in a traditional Q-table, such as those involving high-dimensional inputs like raw pixels from a video game.

- Generalizes across states: By using a neural network, DQN can learn to generalize from similar states enabling it to make informed decisions in new situations it hasn't seen before.

- Robustness: The combination of experience replay and target networks makes the learning process more stable and robust with a significant improvement over standard Q-learning for complex problems.

Summary of the analysis:

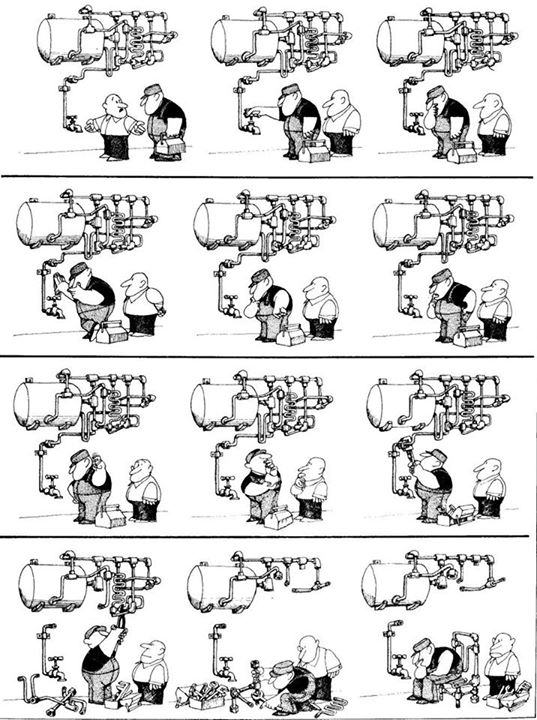

In summary, in Hive this separate "target" neural network which must be used to calculate the Q-target & Q-values at all times, in my opinion, always has to be the entire community. And taking seriously the opinion and recommendations of each and every Q-learning member of the ecosystem. Whether they are large or small. Likeable or unlikeable. With high or low stake and regardless of how much skin they may have in the game.

Because in this multicultural DQN game, a separate "target" network always is gonna be just that: ¡A Separate "target" Network! but with each important piece, gear and cog running within it holding so many diverse, different and essential Q-targets & Q-values, that all of them must be heard, examined, dissected, scrutinized and taken into account in unison if we truly want to have a well-oiled agent-machine that is capable of taking us to the goal. That's how it is, and that's how it should always have been.

For further info and tidbits on the topic, please watch this video below.

«««-$-»»»

"Follows, Comments, Rehives & Upvotes will be highly appreciated"

@por500bolos, you're rewarding 0 replies from this discussion thread.