The Turing Test and Machine Intelligence (part I)

By Enio...

In 1950 the English mathematician Alan Turing published one of his most memorable and interesting papers in computer science, one that would open a long-running debate about the scientific methodology and the philosophical implications of what would later be known as artificial intelligence.

This is not the first time we have talked about Alan Turing and his work. In a previous installment we mentioned how Turing wrote the first computer program to play chess, which was really an algorithm rather than a program, since the technical limitations of the time never gave him the opportunity to implement it. Today, however, Turing is better known as the father of computer science, since his mathematical work formalized and cemented the foundations of the discipline.

⬆️ Alan Turing, British mathematician, computer scientist, cryptographer and philosopher. Picture: Jon Callas (CC BY 2.0)

Even in the era of the first generation of computers (1940-1952), which we could metaphorically compare to the superorder Dinosauria (you know, because of how ancient and still "underdeveloped" it was), computers had already shown great usefulness and potential as a technology of the future. Academics soon began debating their limits and capabilities, and even whether they could become as intelligent as humans, so the father of computer science himself could not fail to contribute conjectures and ideas of his own.

Several of these ideas are condensed in the paper Computing Machinery and Intelligence, which he published in 1950 in the academic journal Mind (Oxford University), which at the time published work in analytic philosophy. In this essay, 29 pages long in its original edition, he discusses whether computers could be considered thinking entities, a question with scientific, technological, philosophical and psychological implications, some of which we will address in these posts.

Turing opens the essay with a compelling introduction that I cannot resist quoting:

I propose to consider the question, "Can machines think?" This should begin with definitions of the meaning of the terms "machine" and "think." The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous, If the meaning of the words "machine" and "think" are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, "Can machines think?" is to be sought in a statistical survey such as a Gallup poll. But this is absurd. Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.

The new question he proposes is built around a method he calls the imitation game, which is based on a game played by the Victorian aristocracy. Turing's version of the game involves a man (M), a woman (W) and an interrogator (I) of either sex. The three are kept apart so that they cannot see or hear each other, or make any kind of contact except through a teletypewriter (a typewriter-like device); they can therefore only communicate in writing. The interrogator's task is to work out, through his or her questions, which player is M and which is W. The woman cooperates with the interrogator, but the man does not: he must fool the interrogator into believing that he is the woman.

Turing then proposes modifying the game so that a machine (computer) C takes the place of one of the human players (in Turing's own formulation, the man M). The new question, Q2, asks whether C will perform, broadly speaking, as well as the human it replaces: that is, whether it can deceive the interrogator I as often and as effectively as a human player would.

Turing believes that Q2 can replace the original question of whether computers can think, turning the problem into something clearly measurable and quantitatively analyzable. If properly conducted, the experiment should help determine whether a computer has become capable of thinking the way a human being does. The reasoning rests on the premise that using natural human language is a complex process that requires at least some intelligence and capacity for thought.
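To illustrate what "quantitatively analyzable" can mean in practice, here is a minimal Python sketch, assuming each round is recorded simply as who the impostor was and whether the interrogator was fooled. The `Round` record, the function names and the `tolerance` margin are hypothetical illustrations, not anything defined in Turing's paper:

```python
from dataclasses import dataclass


@dataclass
class Round:
    """One (hypothetical) round of the imitation game."""
    impostor: str              # "man" (M) or "machine" (C): the player trying to deceive I
    interrogator_fooled: bool  # True if I wrongly took the impostor for the woman W


def fooling_rate(rounds: list[Round], impostor: str) -> float:
    """Fraction of rounds with the given impostor in which I decided wrongly."""
    relevant = [r for r in rounds if r.impostor == impostor]
    if not relevant:
        raise ValueError(f"no rounds played with impostor={impostor!r}")
    return sum(r.interrogator_fooled for r in relevant) / len(relevant)


def machine_does_as_well(rounds: list[Round], tolerance: float = 0.05) -> bool:
    """Rough reading of Q2: is the machine about as deceptive as the man?"""
    # 'tolerance' is an arbitrary illustrative margin, not part of Turing's paper.
    return fooling_rate(rounds, "machine") >= fooling_rate(rounds, "man") - tolerance


# Toy data invented for illustration: 10 rounds with each impostor.
rounds = [Round("man", i < 3) for i in range(10)] + [Round("machine", i < 2) for i in range(10)]
print(fooling_rate(rounds, "man"), fooling_rate(rounds, "machine"))  # -> 0.3 0.2
print(machine_does_as_well(rounds))                                  # -> False
```

Comparing the machine against the man's own fooling rate, rather than against a fixed number, mirrors the way Q2 is phrased: the question is whether the machine does as well as the human it replaces.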

Over the years this experiment became known as the Turing test, and it has since been modified and implemented in various ways, as we shall see.

But let us return to the paper for a moment. After some further considerations, Turing makes a couple of predictions for "the end of the century," i.e., around the year 2000. In his view, by then computers with a storage capacity of about 10⁹ bits (roughly 125 megabytes) could play the imitation game so well that an average interrogator would have no more than a 70% chance of making the right identification after five minutes of questioning, which would be tantamount to saying that the computer passed the test. He also predicts that by then the use of words and general educated opinion would have changed so much that one could speak of "thinking machines" without expecting to be contradicted.
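The storage figure is a plain unit conversion, assuming 8-bit bytes and decimal (SI) megabytes; in binary units the same capacity is a little under 120 MiB:

$$
\frac{10^{9}\ \text{bits}}{8\ \text{bits/byte}} = 1.25\times10^{8}\ \text{bytes} = 125\ \text{MB} \approx 119.2\ \text{MiB}
$$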

However, it is worth asking ourselves now: have these predictions been fulfilled? Have the computers of the 21st century passed the Turing test? Has research in artificial intelligence led us to the emergence of machines that can think? What do you think? It is a good thought exercise to try to answer these questions ourselves before moving forward.

Indeed, these are problems and challenges specific to Artificial Intelligence, a branch of computer science that has truly taken off in the 21st century, driven by advances in and widespread application of techniques such as machine learning, as well as by the enormous processing power of cutting-edge hardware. A common way of framing the field distinguishes two major types of artificial intelligence: weak and strong. Weak AI is limited to specific tasks, whereas strong AI aims to match or surpass human intelligence, so an artificial entity possessing it must be able to solve problems in any general domain.

Since the conditions Turing envisioned require manipulating and mastering human language, it is natural to think that the experiment could include questions designed to demonstrate the computer's (hypothetical) ability to tackle intellectual problems of any kind and to communicate its answers to humans. Under this approach, mastering human language would be not only an end in itself, but also a means in artificial intelligence research.

The Turing test could therefore serve as a benchmark for strong artificial intelligence, although, as we shall see, this claim is neither as straightforward nor as uncontroversial as it may seem. But let us return to the question posed earlier: have any computers ever passed the Turing test?

Because of the sharp distinction that currently exists between hardware and software, in computer science and engineering jargon we do not necessarily speak of computers or machines that exhibit intelligent behavior, but rather of their programs. These include chatbots: computer programs that, to a greater or lesser extent, behave like a human being specifically when carrying on a conversation, thus meeting the sine qua non condition of the Turing test: the use of human language. Let's take a look at some of these programs.

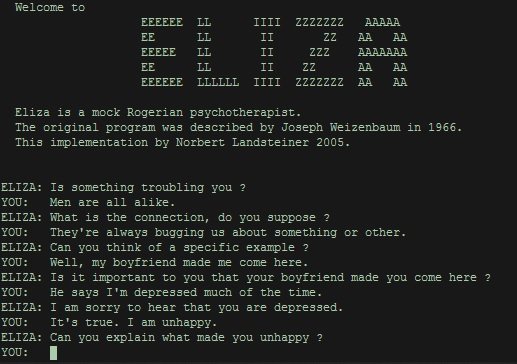

Among the historical chatbot-type programs that succeeded in fooling people by impersonating a person is Eliza. Created back in the 1960s, this program played the role of a Rogerian psychotherapist, often repeating, rephrasing or turning into a question whatever the "patient" had just said, thereby keeping the conversation flowing: a very convenient technique for a first-generation chatbot with many limitations.

⬆️ View of Eliza program execution on a text interface. Picture: public domain.
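The reflection trick described above can be sketched in a few lines of Python. This is a toy illustration, not Weizenbaum's program: the pronoun table, the three rules and the fallback prompt are hypothetical, chosen only to show how matching a pattern and swapping "I"/"you" keeps a conversation going without any understanding:

```python
import re

# Hypothetical pronoun swaps used to "reflect" the user's words back at them.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "myself": "yourself",
}

# A few illustrative (pattern, template) rules. The actual DOCTOR script was
# far richer (ranked keywords, decomposition and reassembly rules).
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Why do you say your {0}?"),
]

# Generic prompt used when no rule matches, to keep the "patient" talking.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(utterance: str) -> str:
    """Turn the user's statement back into a question, Rogerian style."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)  # rules are tried in order; first match wins
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I feel you ignore me"))  # -> Why do you feel I ignore you?
print(respond("The weather is nice"))   # -> Please go on.
```

Note that the program never models what the user means; it only rearranges the user's own words, which is precisely the kind of conversational trick critics of chatbot "successes" point to.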

Eliza charmed her users, but she also inspired a later and more powerful chatbot called Parry which, in curious contrast to Eliza, posed as a patient with paranoid schizophrenia. Parry achieved the feat of fooling not just average people, but practically half of a group of psychiatrists! Yes, the very professionals best qualified to judge the authenticity of the profile Parry was playing. If these examples make you curious, don't worry: we will revisit and delve into these cases in a future installment.

In more recent years we have seen the emergence of countless chatbots, many of which test their capabilities at showcase events and competitions. The most prominent of these is the Loebner Prize, which has a long history and has been supported by many universities. Held since 1991, this competition implements a version of the Turing test in which several judges and several players (humans and programs) take part simultaneously. Its ultimate goal is to encourage the emergence of an artificial intelligence that can perform satisfactorily in an expanded version of the Turing test that includes textual, visual and auditory comprehension.

Although to date no program taking part in the Loebner Prize has passed the Turing test, awards have been given to those judged the most realistic and most human, such as Mitsuku, a celebrity among gamers, geeks and many digital natives who use platforms such as Twitch, Kik and others. There is also the chatbot Eugene Goostman, which has been a Loebner Prize finalist and is also credited with passing the Turing test in 2014, at a showcase event held to mark the 60th anniversary of Turing's death, where the bot reportedly fooled 33% of the judges.
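Treating Turing's 1950 prediction as a pass mark, as is often done, turns it around: if interrogators are right no more than 70% of the time, then at least 30% of them are fooled. The sketch below checks a panel of verdicts against that reading. It assumes the verdicts are simply pooled into one rate, which is a simplification of how any real event was scored; the function names and the example panels are hypothetical, not the 2014 data:

```python
from fractions import Fraction

# Turing's prediction: an average interrogator would have no more than a
# 70% chance of making the right identification after five minutes.
MAX_CORRECT_RATE = Fraction(7, 10)


def fooled_rate(verdicts: list[bool]) -> Fraction:
    """verdicts[i] is True when judge i took the bot for a human."""
    if not verdicts:
        raise ValueError("need at least one verdict")
    return Fraction(sum(verdicts), len(verdicts))


def meets_turing_prediction(verdicts: list[bool]) -> bool:
    # Correct identifications <= 70%  <=>  fooled judges >= 30%.
    return 1 - fooled_rate(verdicts) <= MAX_CORRECT_RATE


# Hypothetical panels: 1 of 3 judges fooled (about 33%) vs 1 of 4 (25%).
print(meets_turing_prediction([True, False, False]))         # -> True
print(meets_turing_prediction([True, False, False, False]))  # -> False
```

Exact fractions are used instead of floats so that borderline panels (for example exactly 3 of 10 judges fooled) are not misclassified by rounding error.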

However, despite the success of programs such as Eugene, Eliza and Parry in carrying on conversations that some people found indistinguishable from human ones, much of the scientific community remains skeptical of these achievements. Some critics point to flaws in methodology; others raise more substantive objections, such as the fact that these bots do not actually possess any ability to think, let alone qualify as strong artificial intelligences. In their view, the developers are building appearances and resorting to conversational tricks to distract the judges, without demonstrating any real ability to think.

Is this a flaw in the Turing test itself? Is it a matter of appearances rather than genuine intellectual processes? Or will computers never be able to think and be as intelligent as humans, because of fundamental obstacles that prevent them from doing so?

Turing himself acknowledges that there are all kinds of objections to the idea of thinking machines, although he also says in his essay that he is not interested in whether this or that particular machine can think, but rather whether there are imaginable computers that could do so. In other words, the question is whether a thinking computer is possible in theory, since the leap from theory to practice could then be made through engineering. We will address these issues in a future article.

Some References

- Turing, A. M. (1950). Computing Machinery and Intelligence. Mind 59(236): 433–460.

- Saygin, A. P., Cicekli, I. and Akman, V. (2000). Turing Test: 50 Years Later. Minds and Machines 10(4): 463–518.

- Loebner Prize official website

- Eugene Goostman chatbot official website

- Mitsuku chatbot official website

If you are interested in more STEM (Science, Technology, Engineering and Mathematics) topics, check out the STEMSocial community, where you can find more quality content and also make your contributions. You can join the STEMSocial Discord server to participate even more in our community and check out the weekly distilled.

Notes

- The cover image is by the author and was created with public domain images.

Great review of all the advances that Alan Turing brought to computing!

That's right. Thank you!

I have actually never taken the time to dig into the history of the Turing test (I barely remember a movie called "Ex Machina" that I saw a few years ago). Therefore, I really enjoyed reading your post (especially the more modern part with current Turing test competitions).

I agree with your conclusions: will we ever be able to demonstrate that a machine passes the test, as at the end of the day it is only a matter of learning appearances? Obviously, this is somewhat different from thinking.

I will stop here, as I have nothing very clever to say (it is late). In fact, I don't know the answer to that last question, and it is also very far from my domain of expertise...

I am looking forward to reading the second part of this blog :)

Yeah, there are several interesting movies that talk about this test. I hope there will be computers that can pass these tests at some point. As for the questions, we'll continue addressing them in the next post. Thanks for reading and for your sincere comment!

You are welcome. I was happy to find your post, which I almost missed. For some weird reason, I was not following you. Of course, I fixed this yesterday ;)

See you very soon for part 2!

Cheers ^^

Sure, thank you!

I had not heard of this scientist, but his work is more than interesting. In my opinion, a machine may not be thinking, but it may be able to imitate an infinite number of human actions, to the point that it becomes almost impossible to tell whether it is a human or not.

Yeah, there is an argument along those lines: when it comes to building a machine with thinking capabilities, it's all about appearances. Hopefully someday there will be AIs that are truly conscious. Thanks for your comment and the support!

Apparently Turing thought that it's impossible to fool a human into thinking that someone is thinking. We can be fooled into thinking that a straw inside a glass of water is bending, we can be fooled into thinking that a dream is reality, but if we think someone is thinking, we can't be wrong.

Next time I see the statue of The Thinker from a distance, mistake it for a real person and form inside my head the notion that 'this person over there is thinking', that will be proof enough that rocks can think :D

Yes, it's possible. We'll address some of the objections soon. Thanks.

What about advanced language models? Do you think they can pass the TT? According to this paper, not yet, but they are close.

https://openreview.net/forum?id=Ct1TkmRmYr

Hey! I think it will eventually pass the test. As far as I know it hasn't done so yet, as the paper you cited shows. Based on my interactions with it, sooner or later I can tell it's not fully like a human. Of course, our direct interactions with it are not scientifically valid, but one can learn from them to tweak the methodology. Thanks for stopping by.