Reacting to THE WAR: Debunking 'AI' - Part 2.3

Intro

Last year I had a brief exchange with @lighteye, who mentioned me in his article Mate in 19!? In the comments he invited me to examine a series of posts he had previously published, entitled THE WAR: Debunking 'AI'. The title is partially self-explanatory, but it is only after reading the text that one better understands his views. Hence I invite you to read his posts and compare them with your own opinion on the matter.

I accepted LightEye's invitation and have already started reacting to his second article, entitled THE WAR: Debunking ‘AI’ – Criteria of a genuine AI (Part II of III). You can find Parts 1 and 2 of my reaction here and here respectively. The current post is the third and final part (though there is still one more post of the original series left to react to). I will go through his ideas mostly in the order of his paragraphs, quoting verbatim to better represent him.

I hope that with this reaction the curious readers can learn a little more, clear their doubts and continue to form an educated opinion on the topic. Let's begin.

"Debunking ‘AI’ – Criteria of a genuine AI" Debunked - Part 3

First, let's review what we said in Part 2 about the proposed AI criteria popularized by many people and mentioned by LightEye in his article:

1. That the potentially intelligent machine should demonstrate self-sufficiency

Here we concluded that self-sufficiency, understood as the ability to fend for oneself and reproduce, is a more distinctive feature of life, whether natural or artificial. If we make further refinements, it can be said that all forms of life are self-sufficient, but not all forms of life are considered indisputably intelligent, although they may demonstrate varying degrees of intelligence. Therefore, it is not necessary to require self-sufficiency to define intelligence.

2. That the potentially intelligent machine should demonstrate curiosity

We concluded that curiosity is associated with intelligence, but we are of the opinion that it is not necessary. We can add that in the specialized literature it is studied in psychology, but also in biology. It is considered a psychological state, but also an instinct. This explains why many animals show behaviors of curiosity even if some do not consider them as intelligent beings. Ultimately, we saw that demanding this criterion of intelligence is rather a radical anthropomorphization of the concept of intelligence.

3. That the potentially intelligent machine should possess a sense of humor

And this is the confirmation of the idea of radical anthropomorphization. For many non-specialists, a genuine AI should be as close to human intelligence and behaviors as possible. It is true that human cognition is a useful reference, but the goal of AI projects, for specialists, is not to create an artificial "human" mind. In any case, we saw that there are many advanced language models that are able to understand and conceive jokes, demonstrating a sense of humor, although never in a perfect way.

On this occasion, we will analyze the last aspect of artificial intelligence that the author of the article questions: creativity. Let's see how he puts it:

There are people who admire the ability of ‘artificial intelligence’ to write an essay on some literary work or to create an oil painting, completely in the style of a great painter. It’s just that in reality all of that has nothing to do with intelligence, but again, with the amount of data entered into the expert system. (LightEye.)

I'm afraid that this statement is erroneous for several reasons.

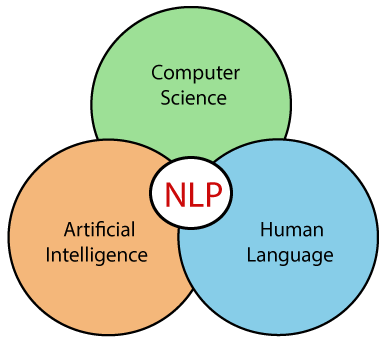

First, as has happened throughout his series, the author confuses expert systems with other applications of artificial intelligence that do engage in creating images or composing essays. Expert systems are programs that simulate human reasoning in a specific area of knowledge, but they are not the only ones that can generate creative content. There are other AI programs that use techniques such as machine learning, neural networks or natural language processing to create works of art, literature or music.

Second, LightEye downplays the ability of artificial intelligence to write essays or create oil paintings, as if it were trivial or irrelevant. However, this is a sign of the evolution and advancement of AI research, which has managed to overcome many challenges and limitations that were once considered impossible.

A few decades ago, computers were criticized for not knowing how to write or paint, and now that they know how to do it, they are denied merit or intelligence as if to say "it's no big deal, after all". Natural language processing and image generation are very complex and demanding areas of research, requiring a lot of creativity and skill.

⬆️ Natural Language Processing is an interdisciplinary and demanding area, not a triviality

(Wikimedia Commons)

Third, if writing essays or generating images in the style of oil painting is not a criterion of intelligence, then what is? Are there not linguistic intelligence, visual-spatial intelligence, musical intelligence and others, which have been proposed and recognized by theories such as Howard Gardner's multiple intelligences? It could be argued that by denying the intelligence of AI, the author is also denying the intelligence of humans according to that theory.

Essays are easy to compose from pre-existing saved considerations. The more famous the work you want to write an essay about, the better the ‘composition’ you will get. (LightEye.)

Again, the author underestimates the difficulty and complexity of writing coherent texts from pre-existing information, both for computers and humans. Copying and pasting is not the same as analyzing, synthesizing and composing. There are all kinds of texts, styles and genres, such as narrative, poetry, prose, argumentative, informative, descriptive texts, etc., and artificial intelligence has demonstrated its ability to make incursions into all of them, with surprising and original results.

It has not been easy for human beings to find a way to program the computer to do so; it is a whole process. It is not a matter of programming heuristic rules on what to write, as the author believes, but of applying machine learning techniques, based on the inductive method, so that the program learns to write from existing information. This process requires a lot of creativity and intelligence, both from the humans who design and train the AI programs, and from the programs themselves that generate the texts.
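To make the difference between hand-written rules and inductive learning more concrete, here is a minimal sketch in Python. It is only a toy: a bigram model that learns which word tends to follow which from an example text and then samples new sequences. Real language models rely on neural networks trained on vastly more data, so this is an illustration of the inductive idea under my own assumptions, not a description of how any actual system works. The names `train_bigrams` and `generate` and the tiny corpus are made up for the example.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Learn, purely from examples, which words were seen following each word."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, seed=0):
    """Build a word sequence by sampling continuations observed during training."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:  # this word was never followed by anything: stop early
            break
        out.append(rng.choice(options))
    return " ".join(out)


# Hypothetical toy corpus, invented for this illustration.
corpus = "the cat plays chess in the snow and the cat wins the game"
model = train_bigrams(corpus)
sample = generate(model, "the", 8)
print(sample)
```

Notice that no rule about what to write was programmed anywhere. Everything the program "knows" about word order was induced from the example text, and a different corpus would yield a different writer.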

It is true that the more information available on a topic, the easier it will be to write about it, but that does not imply that the text will be better or more creative. On the contrary, sometimes the lack of information can stimulate imagination and innovation. If LightEye believes that humans become more creative than AI in the absence of information, he should present evidence to support it, not just an opinion. In any case, it still seems to me an interesting hypothesis to investigate.

As for the pictures, you can check the creativity of the ‘artificial intelligence’ very easily. There are already a lot of examples online where ‘AI’ paints people with strange anatomy, six or more fingers, or no fingers, or no toes, or three legs. (LightEye.)

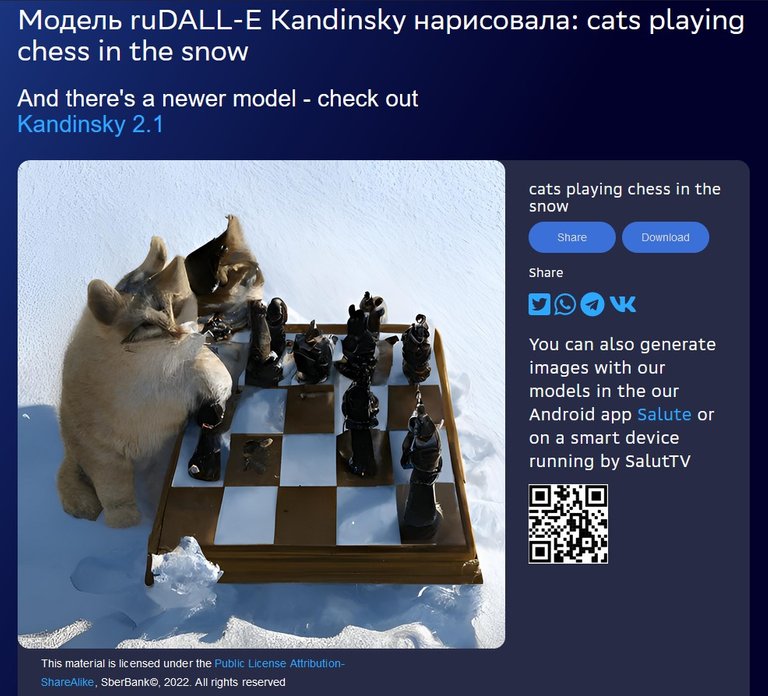

For example, one of the best free image generators with ‘artificial intelligence’ ruDALL-E.com reacts like this to the query ‘cats playing chess in the snow’ (LightEye.)

To support his point, LightEye shows a set of images generated for that query.

It is true that AI-generated images are not perfect, and they may never be. But even the best human artists have made anatomical, proportional and other "mistakes", whether minor or major. Are we to think that these painters have no creativity or intelligence for having made these "mistakes"? It is clear that the reasoning is suspect.

⬆️ Dance - 1910 - by Henri Matisse. Dude, those curves and colors look strange and don't mean much to me. Looks like Henri Matisse doesn't have much "creativity" after all according to LightEye 😉

(Dennis Jarvis (CC BY-SA 2.0 DEED))

The problem is that LightEye confuses perfection with creativity, assuming that only error-free images are creative. However, creativity is not measured by precision or accuracy. Being creative is supposed to be about being able to generate something new, different or valuable. Creativity involves experimenting, exploring, innovating and taking risks, which can sometimes involve mistakes or imperfections, but that does not mean that creativity is less or non-existent. On the contrary, I have heard many times, especially in songs and poems, that mistakes can be a source of inspiration or beauty.

Another problem is that critics like LightEye ignore the context and purpose of AI-generated images, judging them only by their appearance. I am not an art expert, but many times these "mistakes" are not bugs, but features of the style. The type of composition will depend on the expectations and artistic styles applied. Did you know that abstract art exists? Expressionism? Surrealism? Cubism? Fauvism? etc.

That is why not all images have to be realistic or natural, much less perfect. It is also undeniable that there is an element of subjectivity there: What for some may be a mistake, for others may be a characteristic or something intentional and welcome.

But that is not the worst part of the argument given. As with the previously discussed case of the composite chess puzzle that the AI could not solve, LightEye generalizes his questioning of the AI's ability to generate images creatively by making the same logical and methodological errors: he relies on a biased and limited sample of images, which does not represent the diversity and quality of AI-generated images.

The author selects only the images with imperfections or (seemingly) little meaning, ignoring those that are realistic, original or impressive. This is a case of cherry-picking, a fallacy that consists in choosing only the evidence that supports one's own position and discarding whatever contradicts it. There is also a negativity bias at work: he pays attention only to the negative aspects of the images, ignoring the positive ones, which are more numerous.

⬆️ Confirmation Bias

(Public domain)

Let's continue to pay attention to the article:

So, there is probably no bigger database of photos on the net than the one with cats. Chess also has a lot of photos and information, and yet the ‘artificial intelligence’ can’t put together anything meaningful. Here is an example with the same query on the Craiyon.com platform: (LightEye.)

And this is the photo he attached:

I don't know, it looks pretty good to me. It's not a 'masterpiece', but it has a decent accuracy considering it was drawn by an AI and not by a human artist. This is my subjective assessment, but I think others with basic knowledge of the capabilities and limitations of AI will agree with me.

I think it is important to understand that it is not about reproducing an exact photograph of cats playing chess in the snow, but about creating an original, different and maybe funny image. That is what being creative means in the face of a somewhat absurd request (cats playing chess looks like something out of a cartoon).

Now, can an average person produce the same? Can LightEye or I paint cats playing chess in the snow better than this? After all, it's a silly request. I don't know. This objection is just a reminder that it is healthier to evaluate all the data and pay attention to central tendencies, rather than generalizing based on outliers. We should not make hasty generalizations based on a few images that may not even be the most representative.
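The point about central tendencies versus outliers can be illustrated with a small simulation in Python. To be clear, the scores below are randomly simulated, not measurements of any real image generator, and the 0 to 10 quality scale, the distribution and the sample sizes are all assumptions I made up for the sketch.

```python
import random
import statistics

rng = random.Random(42)  # fixed seed so the simulation is reproducible

# Hypothetical quality scores (0-10) for 1,000 generated images.
# Simulated values only, NOT real data about any AI system.
scores = [min(10.0, max(0.0, rng.gauss(7.0, 1.5))) for _ in range(1000)]

# Cherry-picking: keep only the five worst results and judge by them.
cherry_picked = sorted(scores)[:5]
cherry_mean = statistics.mean(cherry_picked)

# A fairer summary: the central tendency of the whole sample.
overall_median = statistics.median(scores)

print(f"mean of the 5 worst images: {cherry_mean:.2f}")
print(f"median of all 1,000 images: {overall_median:.2f}")
```

Whatever the exact numbers, the average of the five worst images cannot exceed the median of the whole set. Judging a generator by a handful of hand-picked failures tells us about the selection, not about the typical output.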

Once again, it is true that not all AI-generated images are perfect and super meaningful (although that seems subjective), especially if we are looking for a realistic and natural style. The expert developers of these AIs are constantly improving them to increase their indicators of success and quality. So we may well have high standards of quality, but we must also resist confirmation bias and negativity bias, and recognize the merit of AI programs when they demonstrate a creativity different from our own.

The inability of today's expert systems, among other things, is caused by the fact that they were created (programmed) from the beginning with a very specific purpose that has nothing to do with intelligence. (LightEye.)

I suppose he is referring to the "inability" of the AI program to draw realistic images of exceptional quality 100% of the time. This same inability is present in most of the human population, yet we do not conclude that those people lack intelligence and creativity. The focus on "inability" is again reminiscent of the negativity bias, since the point could just as well have been framed as the limited ability of the AI program.

LightEye acknowledges that the AI program (not an expert system, as he keeps calling it) has been "created for a specific purpose". Yes, that is purpose-driven AI, and it does have to do with intelligence for the reasons we discussed in the previous article.

As a reminder: Intelligence does not have an ultimate, agreed-upon, universal definition. In the field of AI research it has been defined, among other things, as context-, goal- and medium-dependent. The field seeks to replicate cognitive processes such as reasoning, perception, problem-solving, learning, etc. A program that carries out such processes is therefore said to have artificial intelligence.

Let’s look at a reverse example of trying out ‘artificial intelligence’. There's a great woman at Bastyon.com platform who tests ruDALL-E.com with the oil painting portraits and very often sends her own photos of poppy flowers. She recently started experimenting with nonsensical input, and just look at the result: (LightEye.)

Honestly, I didn't understand this part, so I can't comment on it. However, the images he attached are quite nice.

So neither intelligence nor creativity, but obviously there is data about what a person likes! There is control and surveillance! The danger is real. And that brings us to the key question that we are going to answer in the final and scariest part of this series: Who needs fake artificial intelligence and why? (LightEye.)

Does he mean that the more private data the website or AI application collects from the user, the higher the quality of the results? It is not clear to me, but from a technical point of view it could make sense. The AI could use extra information to improve, learn and generate something new. All in all, the accompanying discourse is alarmist and confusing. However, I don't see how you can get there by assuming that AI programs don't really demonstrate intelligence and creativity.

Finally, the "control" and "surveillance" thing is something the author has hinted at in his articles but has not yet developed. He will certainly do so in his third and final installment, to which we will react as well. See you there.

Notes

- Most of the sources used for this article have been referenced between the lines.

- Unless otherwise noted, the images in this article are in the public domain or are mine.


Nice critique. Apparently we still have a very long way to go, especially with regards to this 👇

Yes, it's a complicated physical process humans haven't fully described and explained yet.

Thanks.

Reading this was eye-opening in an array of ways and perspectives. The critique is sound and opens the waters for a lot of other things not involving AI. Concerning AI becoming perfect in image generation, I think that is achievable in the long run; the question is "How long in the long run?"

Probably not too long from now. A decade in computer science and technology is like a century of progress in other fields, to put it that way.