Reacting to THE WAR: Debunking 'AI' - Part 1.2

Intro

I recently had a brief exchange with @lighteye, who mentioned me in his article Mate in 19!? In the comments he invited me to examine a series of posts he had previously published under the title THE WAR: Debunking 'AI'. The title is partially self-explanatory, but his views only become clear after reading the text. I therefore invite you to read his posts and form your own opinion on the matter.

I have accepted @Lighteye's invitation and have already started on the first part. Please read it to understand the context. Here I will develop the second part of my reaction to his article entitled THE WAR: Debunking ‘AI’ – Chess Intro (Part I of III) which discusses two of my favorite topics: AI and chess. I will go through his ideas mostly in the order of his paragraphs, quoting verbatim to better represent him.

I hope that with this reaction the curious readers can learn a little more, clear their doubts and continue to form an educated opinion on the topic. Let's begin.

"Debunking ‘AI’ – Chess Intro" Debunked - Part 2

In part 1 we left off where @lighteye was arguing that there is no such thing as "Artificial Intelligence" and that saying that man cannot beat a machine is wrong because:

- Chess is not a game of intelligence, but a game of memory. (Lighteye.)

and

- Intelligence cannot be measured by only one highly abstract game of memory, no matter how complicated it may be. (Lighteye.)

We saw that the first premise is not easy to accept, at least as it was stated and explained, and that there are more appropriate ways to categorize chess in mathematical and computational terms, such as zero-sum game, perfect information game and high complexity game.

With respect to the second premise, it can be granted without problems, as long as we have a definition of intelligence as a reference, which we did not have in his post and which, I'm afraid, makes the whole argument a play on words.

But let's continue paying attention to how he elaborates this idea:

Regarding the second point - it is easy to see that the machine has no intelligence from the following example. This is a game played on LiChess between users smurfo (white) and ukchessbomber (black). After the 28th move, the following position was created: (Lighteye.)

Even the average chess beginner will easily judge this position as completely winning for white. However, the strongest chess machine in the world at the moment – Stockfish – declares this position at the first glance as convincingly better for black, and even by 5.6 points! (Lighteye.)

The position that Lighteye shows in his example is a composed position, that is, it has been intentionally crafted and did not come from a practical game (although such positions can occasionally occur in practical games). This is important for a point I will make later.

The composition develops an aesthetic motif in the form of a paradox: Black possesses an immense material advantage, which in the vast majority of chess situations represents a sure victory, but this is a notable exception, since Black's pieces are trapped and cannot actually participate in the game. White, although possessing much less material, has enough pawns that he can actively participate in the fight and outnumber his opponent's active forces, resulting in an eventual decisive advantage.

The Stockfish chess program, whether the AI version or the classical version, has difficulty evaluating this position correctly. Usually it misjudges the position only briefly, but sometimes the wrong evaluation persists for a long time.

⬆️ Stockfish Chess Engine Logo (picture: Stockfish website).

Lighteye's argument is that the inability of the engine, or the difficulties it has, to correctly evaluate this position shows that it is incorrect to consider that the engine demonstrates intelligence.

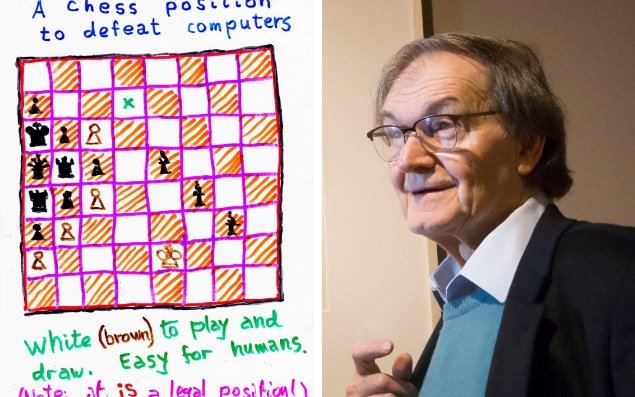

This type of reasoning has been heard before. The most notable case was that of the well-known physicist and mathematician Roger Penrose, who is a lover of puzzles. He came up with a chess puzzle that in his opinion "may hold the key to human consciousness". Sir Roger Penrose said the following about his composition:

If you put this puzzle into a chess computer it just assumes a black win because of the number of pieces and positions, but a human will look at this and know quickly that is not the case

[...] We know that there are things that the human mind achieves that even the most powerful supercomputer cannot but we don’t know why. (Roger Penrose.)

⬆️ Roger Penrose and his chess puzzle (picture: The Telegraph).

The truth is that his result was confirmed by other people. The chess engines at the time really misjudged the position.

The fact that such a prominent person as Penrose commented on chess was well received. What was not so well received by the chess community, or at least did not make an impact, was the quality of his composition, which is indeed defective and unimpressive. This is curious, as his brother Jonathan Penrose was a chess Grandmaster.

Moreover, this type of engine behavior was already well known to chess players. It is known that fortress positions and such have always been their Achilles heel.
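
To illustrate why a position with a huge material imbalance can mislead a naive evaluation, here is a minimal sketch of a pure material count. The piece values are the conventional ones (pawn 1, knight and bishop 3, rook 5, queen 9). The piece lists are hypothetical and do not reproduce Lighteye's or Penrose's position, and a real engine evaluates far more than material.

```python
# Conventional piece values in pawns; the king is not counted.
PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9}


def material(pieces: str) -> int:
    """Sum the conventional values of a string of piece letters."""
    return sum(PIECE_VALUES[p] for p in pieces)


def material_balance(white: str, black: str) -> int:
    """Positive favors White, negative favors Black."""
    return material(white) - material(black)


# Hypothetical piece lists (an assumption for illustration only):
# White has four pawns; Black has two rooks, three bishops and three pawns.
white_pieces = "PPPP"
black_pieces = "RRBBBPPP"

print(material_balance(white_pieces, black_pieces))  # prints -18
```

A count like this says nothing about whether the pieces can actually move, which is exactly the idea these compositions exploit.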

On the AI-related side, commentators and experts were skeptical about the validity and relevance of Penrose's example. The inbox of the Roger Penrose Institute was full, receiving corrections during those days.

So what is the problem with this kind of argument? The simplest explanation comes from the chess engine programmers themselves, who have stated that a conventional engine is not ideally designed to solve this type of problem. It's not even made to find checkmates as efficiently as possible. In fact, there are separate versions of Stockfish or other engines that specialize in searching for checkmates.

So what is Stockfish for? Stockfish is made to search for moves that minimize its losses while maximizing its gains, in other words, it is made to play chess strongly. Features that show no gain in playing strength, no matter how intuitive or relevant they may seem to a human being, are not included in its programming.
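
The idea of maximizing one's own gains while assuming the opponent does the same is usually called minimax. Here is a minimal sketch of it on a toy game, not on chess and not taken from Stockfish's code: players alternately take 1 to 3 stones from a pile, and whoever takes the last stone wins. The names `negamax` and `best_move` are my own for this illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def negamax(stones: int) -> int:
    """Value of the position for the side to move: +1 = win, -1 = loss."""
    if stones == 0:
        return -1  # the opponent just took the last stone
    # My best result is the best of the negated results of my opponent.
    return max(-negamax(stones - take) for take in (1, 2, 3) if take <= stones)


def best_move(stones: int) -> int:
    """Return the number of stones to take that leaves the opponent worst off."""
    legal = [take for take in (1, 2, 3) if take <= stones]
    return max(legal, key=lambda take: -negamax(stones - take))


print(negamax(4))    # -1: with 4 stones the side to move loses against best play
print(best_move(7))  # 3: taking 3 leaves the opponent with 4 stones
```

A chess engine cannot search to the end of the game like this toy does, so it stops at some depth and relies on an evaluation function instead.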

This is quantified by testing the engine against other versions of itself and against other opponents. As I mentioned, if a feature (for example, the resolution of certain types of compositions) does not pass the test, it means that it did not provide an Elo gain (rating points), so it is not included in the engine's code.
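
As a rough illustration of what "Elo gain" means, the standard Elo formula relates a rating difference to an expected score, and it can be inverted to estimate a rating difference from a match result. This is a sketch under simplifying assumptions, not the project's actual testing procedure, and the match result below is made up for the example.

```python
import math


def expected_score(elo_diff: float) -> float:
    """Expected score (between 0 and 1) for a player rated elo_diff points higher."""
    return 1.0 / (1.0 + 10.0 ** (-elo_diff / 400.0))


def elo_diff_from_score(score: float) -> float:
    """Invert the Elo formula: rating difference implied by an average score."""
    if not 0.0 < score < 1.0:
        raise ValueError("score must be strictly between 0 and 1")
    return -400.0 * math.log10(1.0 / score - 1.0)


# Hypothetical match between a patched and an unpatched version:
wins, draws, losses = 300, 450, 250
score = (wins + 0.5 * draws) / (wins + draws + losses)

print(round(score, 3))                 # 0.525
print(elo_diff_from_score(score) > 0)  # True: the patched version scored above 50%
```

In practice a single match result is noisy, so a measured difference only counts as a gain once enough games have been played to rule out luck.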

So Stockfish is specialized to do this, to perform well when playing chess, but that does not mean that its ability is not exploitable in solving other optimization problems, such as searching for mates and solving composed puzzles. In fact, it can prove to be highly useful in the vast majority of cases, because its algorithms allow it to search for solutions following a general method. It is just that it is not specialized in it, nor is it perfect.

As to whether this difficulty proves that Stockfish is not "intelligent", in fact such examples have already been shown to be irrelevant for the purposes of AI applied to the engine. We are talking about a technical element in the area. Stockfish 16 employs an evaluation function called Efficiently updatable neural network (NNUE), which allows for the integration with other techniques such as alpha-beta search. This neural network is a mathematical model and a very useful technology for problem solving.

Now, this is why it is argued that Stockfish does demonstrate Artificial Intelligence:

- It is a program capable of finding solutions to specific problems and uses generalizable methods, not just a prescribed knowledge base. It also tries to accomplish its goal as efficiently as possible.

- It applies Artificial Intelligence models and technologies, such as the neural network NNUE. Even the classical version is still considered an example of Artificial Intelligence by using heuristic algorithms.

- Its neural network is able to undergo training, learn and improve.

I know Lighteye thinks differently about what should be considered "intelligence", but that's a topic for another post since he didn't develop it in the post I'm discussing (I think he should have started there). However, what I mentioned are the reasons from a technical point of view why Stockfish is considered an example of Artificial Intelligence.

Regarding the comment "Even the average chess beginner will easily judge this position as completely winning for white", ok, it is funny, but also strictly speaking irrelevant. It is true that it highlights something paradoxical and curious, but it is important to understand that we cannot do more than that: we cannot generalize with it and form a verdict.

Thinking that we can is methodologically and logically flawed. This is shown, for example, by giving 10 other random chess positions to the chess beginner, asking him to evaluate them and comparing his evaluation with that of the program. He will not do better than the program.

In fact, it will even be extremely unlikely that the best human players in the world will evaluate and solve the 10 positions better than the engine. Does this mean that the Grandmasters are not intelligent? That would be the logical consequence, considering the argument as given. We can see there's a problem with it.

⬆️ I'm sure if Stockfish could talk, he would say something like this about human chess 😂 Credit: Stockfish Youtube Channel

The example makes it perfectly clear that there is no intelligence involved (Lighteye.)

An alternative reading is that this example makes it perfectly clear how problematic it is to generalize from a minuscule sample (a single game) that has also been cherry-picked. It also reveals an argument from personal incredulity and a play on words, as there is still no definition of intelligence. By the way, Penrose, of course, was careful not to fall into these logical and methodological problems.

Chess machines have two elements: Database and Search Module (Lighteye).

The two main parts of a chess engine are the search function and the evaluation function. The database is not indispensable, but it can make the engine stronger. These would be the opening book and endgame tablebases.
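
The division of labor can be sketched on a toy game tree. This is a simplified illustration, not Stockfish's implementation: `TREE`, `STATIC_EVAL`, `evaluate`, `alphabeta` and `choose_move` are hypothetical names, and the numbers are made up. The search is a negamax with alpha-beta pruning, the evaluation scores positions where the search stops, and the book is an optional shortcut that the engine works without.

```python
import math
from typing import Callable, Dict, List, Optional

# Hypothetical toy tree: each position maps to the positions reachable in one move.
TREE: Dict[str, List[str]] = {
    "root": ["a", "b"],
    "a": ["a1", "a2"],
    "b": ["b1", "b2"],
}

# Hypothetical static scores, from the viewpoint of the side to move there.
STATIC_EVAL: Dict[str, int] = {
    "root": 0, "a": 0, "b": 0, "a1": 3, "a2": -2, "b1": 1, "b2": 5,
}


def evaluate(pos: str) -> float:
    """Evaluation function: score a position without searching further."""
    return STATIC_EVAL[pos]


def alphabeta(pos: str, depth: int, alpha: float, beta: float,
              evaluate: Callable[[str], float]) -> float:
    """Search function: negamax with alpha-beta pruning."""
    children = TREE.get(pos, [])
    if depth == 0 or not children:
        return evaluate(pos)
    best = -math.inf
    for child in children:
        score = -alphabeta(child, depth - 1, -beta, -alpha, evaluate)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break  # the opponent would never allow this line
    return best


def choose_move(pos: str, depth: int, evaluate: Callable[[str], float],
                book: Optional[Dict[str, str]] = None) -> str:
    """Use the optional book if it covers the position, otherwise search."""
    if book and pos in book:
        return book[pos]
    return max(
        TREE[pos],
        key=lambda c: -alphabeta(c, depth - 1, -math.inf, math.inf, evaluate),
    )


print(choose_move("root", 2, evaluate))                      # b
print(choose_move("root", 2, evaluate, book={"root": "a"}))  # a
```

Without a book the function still returns a move, which is the point: the database can add strength, but the search and the evaluation do the work.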

The machine itself cannot think or learn (Lighteye).

Machines can learn. In the field this is called, guess what, Machine Learning, and it is a very technical and very long topic. I have already mentioned that Stockfish incorporates this technology with NNUE.
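
To give a concrete idea of what "learning" means here, this is a minimal sketch of a model with a single adjustable weight that is improved from examples by gradient descent. It is not how NNUE is trained (that involves a much larger network and a very large number of positions), and the data and the names `train` and `mean_squared_error` are made up for the illustration.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input, desired output)


def mean_squared_error(w: float, samples: List[Sample]) -> float:
    """Average squared difference between the prediction w * x and the target y."""
    return sum((w * x - y) ** 2 for x, y in samples) / len(samples)


def train(samples: List[Sample], lr: float = 0.01, epochs: int = 200) -> float:
    """Adjust the weight step by step to reduce the prediction error."""
    w = 0.0  # the model starts out knowing nothing
    for _ in range(epochs):
        for x, y in samples:
            error = w * x - y
            w -= lr * error * x  # gradient step for half the squared error
    return w


# Hypothetical training data that follows the rule y = 2 * x.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

w = train(samples)
print(round(w, 3))  # 2.0
print(mean_squared_error(w, samples) < mean_squared_error(0.0, samples))  # True
```

Nobody wrote the rule "multiply by 2" into the program. The weight was found from the examples, which is the sense in which a machine learns.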

Regarding whether they can think or not, again, it depends on operational definitions, but even if we resort to rigid tests like IQ tests or academic tests, large language models like ChatGPT and others have been repeatedly reported to have achieved scores higher than 99.9% of humans. There is no way to pass those tests without resorting to some kind of reasoning; it is not a "copy and paste" database.

It only knows how to enter every new game that is played on Lichess into the database, and thus make it available to the search module. (Lighteye.)

Lichess has that functionality yes, the one of offering its database of games. It is fed with games that are played on the server. That is different from the chess engine and has nothing to do with it. The engine is used to analyze some of those games, but the engine does not depend on that database.

It is not true that man cannot beat a chess machine (Lighteye).

Chess programs have outperformed humans for decades, and that, paradoxically, made chess less interesting for AI research, or at least for journalists. It is possible for a human to beat a chess engine under controlled conditions; it is just highly unlikely.

Knowing this, can we find a weak point in that expert system? Of course! It’s not true that people can’t beat him – it’s just that whenever you beat him, you’re actually leaving him with a new data input that the system will use in the his database so that he can’t be defeated the same way next time. (Lighteye.)

Stockfish is not an expert system, not in this sense. Neither Stockfish nor any other conventional chess engine depends on a database to work. They can connect to databases, the most accurate of which are the endgame tablebases. I hope you can see that you have misunderstood how chess engines work and how they are developed.

Here's how Jonathan Schrantz, a chess instructor from Saint Louis, a man who doesn't even have a FIDE rating, beats him (Lighteye).

Ok. I guess you, me, anyone can do the same, maybe with Stockfish set to level 8 or lower. Anyone can beat Stockfish if you purposely weaken it, just like anyone can beat a laptop in boxing.

Lowering Stockfish's strength is a valid feature of the engine and is useful in human training. We can't draw conclusions about these games, other than that Stockfish shows poor performance when it is leveled down and given little time to analyze, which is what is expected.

However, I see that Lighteye's point is that the engine is weakened because it "doesn't have much time to access its database". As I mentioned, a chess engine doesn't work like that. If the right move were in a database then, assuming a good internet connection, the query would complete long before you could make your next move, no matter how fast you are with your keyboard and mouse.

And by the way, Jonathan Schrantz does have a FIDE rating as far as I can see ;)

This chess-like introduction was necessary for the sake of the point - we are certainly not talking about Artificial Intelligence, but about a highly developed expert system (Lighteye).

Stockfish is not an expert system, not as it was defined: as a database-dependent program. No, Stockfish is not that.

Since 2020, Stockfish has incorporated an NNUE-type neural network, which is an Artificial Intelligence model and technology. Based on that conceptual definition at least, the engine does demonstrate intelligence.

This is my a posteriori conclusion on the blogpost author's assertion that there is no such thing as Artificial Intelligence. I also did not see the idea of natural stupidity developed. It sounded curious.

In the following posts, we will present what features true artificial intelligence should have, the dangers looming behind the developed expert system, we’ll talk about the philosophical-ethical aspects of development, and most importantly, answering the question: Why would someone stick a false label that says 'Artificial Intelligence' on the developed expert system?

Stay tuned! (Lighteye.)

Sure. I will read and share my impressions on that post as well. I hope you -dear Lighteye- don't mind my replies, especially because I point out the issues that I encounter, although I also recognize what was correctly stated. I am a professor of Computer Science and I am also a chess player. I am partially qualified to talk about this and my response may help educate others.

Cheers.

Notes

- Most of the sources used for this article have been referenced between the lines.

- Unless otherwise noted, the images in this article are in the public domain or are mine.

Thank you!
