AIAgents: How De-Railing is Easy - from Rabbit holes to Opinions

As you may - or may not - know, I am always a bit late to the party, whatever the party is. IRL. In digital space. In almost everything. Except when I agree on a deadline: then I keep that deadline. Rarely, very rarely, I let a deadline slip and deliver late. But but but, this is not the topic I wanted to write about today.

Years ago, long before LLMs (Large Language Models) were introduced to the general public through ChatGPT (was it version 3.0 or 3.5? I don't remember anymore), I found myself in a classroom being taught what AI is, what AI can do for us, which AI algorithms are around, how models are trained, how we can play with such models, and how we can train a model ourselves. The key message: "AI will not replace humans, but assist humans".

I am not sure I agree with that key message. I mean, I think AI will have the ability to take over most, if not ALL, human tasks, from production to creation, to conceptualisation, to innovation, to Nobel levels of research. The question is not IF, but WHEN. Perhaps such AI will even have some form of consciousness; perhaps it won't. Likely it won't, since we don't know what consciousness is. But let's assume it is too complex for us - including AI - to ever understand what it is, let alone to try and create an artificial one. Still...

I am 100% sure AI will become intelligent enough and human-like enough that no human on earth can distinguish AI from a 'real' human anymore.

I put the word 'real' in quotes for a reason: once AI is 100% like a human and can no longer be filtered out by humans, we will have to revisit this question and determine what a 'real' human is.

Back to the classes...

I was quite loud during the training sessions about the strong statement "AI will not replace Humans but assist them!", asking things such as on what grounds and information they based such a hard conclusion. Why does one keep thinking from their own one-sided views? Remain in their tunnels? Why do they not open their minds? Why can't they extrapolate from all the things we've seen become reality in the past? Why does stuff that sounds Sci-Fi remain Sci-Fi in their minds? On top of that, none of the other students asked similar questions. They seemed to be ok with such closed views. Or they may not have had any opinion. They may even have been too lazy to speak up. Or perhaps they don't like confrontations! Honestly, I kind of hope it is the latter.

Anyways... My voice was more or less binned, because I was on my own in a class of 20-odd people, and because it went against what they intended to bring across to the class (literally, the latter was the trainer's answer, after I pushed, pushed, pushed for an explanation).

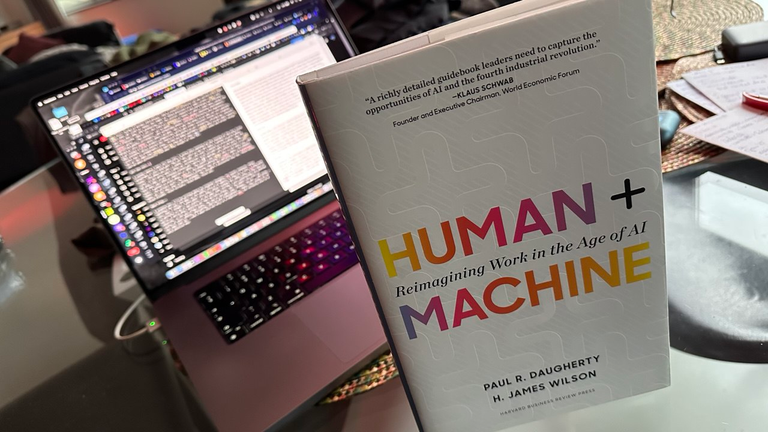

Note that the trainer not only taught private classes but also trained politicians, one-on-one and in groups. Those from my own country (the Netherlands), but also those residing in Brussels (the EU). The classes found their foundation in a book written by two employees of Accenture: "Human + Machine: Reimagining Work in the Age of AI". It was written back in 2018, not long before I took the mentioned classes.

Over the years, perhaps even a decade or more, I have seen many such books, written by peeps with direct ties to the industry: Deloitte and Accenture, but many others as well, like factories, logistics companies and whatnot. They all paint the same picture: AI will not replace their own jobs. AI will not be able to become creative. AI will always be bound to what humans put into it.

They are so wrong!

However, I have no direct means to convince you of such a statement. I suppose time will tell. At this stage, determining what AI can develop into is more belief than science.

We've seen nothing yet!

That is a given for me.

Wasn't it half a year ago that the first AI emerged that created podcasts from the text it was fed? Wasn't it a few months ago that the next iteration was made public through NotebookLM? Sure, it sounded very artificial: no intonation (or the wrong one), no emotions, no human-like flow and such. Wasn't it 1 or 2 months ago that emotional AI entered the game, mixed into NotebookLM, giving the on-stage discussion between two AI voices quite a human feel? Including the ah's and uhm's! And what about the next thing: the ability for a voice AI to be interrupted, to listen to others (AI agents, humans, whatnot) and to respond in real time?! It is not around the corner; it is available today! NotebookLM. This is just the beginning.

Fuzzy logic...

What seemed to be fast wasn't fast at all. A world of research preceded ChatGPT. Did you know AI as a field goes all the way back to the 50s and 60s of last century? More than 60 years ago? Did you know that already in the late 80s and 90s, big-ass applied research was ongoing to make AI useful for a large variety of use cases? I worked on a project like that myself, when I was still trying to become (and behave like) a research scientist. The flavour of AI we used was called Fuzzy Logic. We tried to reduce the number of false positives on a radar screen. I can't tell you whether this ever went into production, but the initial research and simulations we ran were promising.

Then I left the field of research in favour of what I liked more: being the center point - 'the connector' so to speak - between sales people (business development), marketing people (strategic), and technology people (software architects and engineers). Being at this center point wasn't my goal; it was the means to an end, my real passion: driving product, proposition and pitch, and creating tailored stories for targeted B2B customers, while winning the hearts of key people at our prospects and driving our contract stacks to unimaginable sizes. Driven by happy customers, winning their hearts, but at the same time helping them to step out of their comfort zones and accept that things can be done differently from what they had thought thus far.

Off the rail...

I wonder where I went off the rail. At the close of the very first paragraph of this blog post I wrote: "But but but, this is not the topic I wanted to write about today." The above was not, I repeat, was NOT, the stuff I wanted to write about. And the 'late to the party' wasn't about my introduction to AI (already back in the early 90s, like 30 years ago) or my training classes well before LLMs emerged, well before Covid (it must have been late 2018 or sometime in 2019). The topic I feel late to the party for, the topic I wanted to write about, is all the AIAgents emerging in crypto space. The AIAgent Memes. The utility stuff. The framework platforms that we see emerging. All the promises made. All the shit that is being released. The few gems I discovered thus far. Think AI16z. Think ElizaOS. Think Virtuals. Think DOAS. Think LAI.

Next blog post...

I promise you, I'll be writing about my original thoughts and my recent research. After spending the last few days in the rabbit hole of AI Agents, I know for a fact that I know much less about this topic than I thought I knew before finding the rabbit hole. I also know it is possible to be in two states at the same time! Perhaps I just outperformed the quantum world, a world where anything can have multiple states, but the moment the state is observed, it has one unique state, not two at the same time. Anyways, my two .. uhm three .. states at once: Amazed, Bewildered, Baffled and Flabbergasted .. Oopsy, four states all at the same time 😆 ...and I mean this not only in a negative way, but certainly also in a very positive way.

NJOY 2025

🥰

I can see uses for 'AI' (it may be more simulated intelligence), but I don't like the idea of replacing human creativity. These tools tend to be derivative, as they are trained on what humans do. As others have said, I want machines to do the boring stuff and let me be more creative.

I get you 100%.

The question is: are we humans able to direct and, above all, limit technology developments?

Technology can be driven by the markets, but is there enough money out there to fund the massive processing required by these tools? For now people may not want to pay for creating silly images and getting wrong answers to their questions.

When the 'market' decides, then I assure you, we will end up with AI being so good that humans cannot tell the difference between AI and a human. At the same time we will end up in a world where AI does everything. Perhaps because we want it to, or perhaps because AI decided.

The question is only: When?

Processing power, sure, it needs that.

Even in the scenario in which no entity, or collective of any kind, has sufficient money or wants to spend enough money on the development of Super AI, or AGI, or whatever we want to call it, it will still happen. Namely, when energy costs are down to (almost) zero. This may take a few decades, perhaps half a century, maybe even a whole one. But it will happen, since there is no reason to believe energy will not become free to use at some point in time. Renewables + AI + Robotics = Free forever (disclaimer: as long as all the other resources needed to create and maintain systems are available).

This one’s definitely going to be something we grapple with over the next 5 years. I don’t think we’ve got longer than that before things turn wild, if they are going to.

I am seeing cracks in the almighty “AI” gods. The introduction of AI people on Fakebook is a sign that they are running out of data to mine from real people and will need to use fake people. I’ve seen many cases where AI bots talking to other AI bots and pulling information from them end in a lot of useless and nonsensical shit. Yes, they are smart, but so are humans! Plus we do all of this with the flesh we have.

There was some science I heard about a few months ago where they turned a message into blood and translated it. Something along those lines, the point being that the human body is the most intense supercomputer there is, even if we don’t realize it.

I didn't hear/read about the blood thing, so I can't judge whether your statement is relevant. We've seen AIs creating their own language because (likely) this was what AIs preferred amongst each other for some reason. As I mentioned in my blog post, we haven't seen anything yet. Just a glimpse of what is/will be possible, regardless of whether we have exhausted all available information already or not. Note: I doubt we exhausted all 'available' information; I suppose we may have used all easily available information, whatever that is. Time will tell where this is all heading. But on this front too, I don't have in-depth knowledge.

I've seen science fiction become reality many times, so... I share your opinion and your states too. But what is reality? If we go to the quantum level there are different realities, and it all depends on what we can see, what we are able to access at the level of consciousness... Of course consciousness is not a simple thing, and since long before the 60s it has been understood as more than one thing... I don't know if you understand me... I don't really know what I write or what I think, but I try to see the best scenario. 😂 What is the best scenario? We have always been at that point where everything is questionable, and maybe that's why there is a world of the asleep and a world of the awake and... ok. I'll follow your reflections in the next post.

You are pretty funny. But at the same time, the whole topic of 'what is reality' is a real topic and has many different sides to it. Indeed, what is real? Somehow, I like the Holographic Universe model in which we are not real, but we are also not holographic projections. More like: we (everything in our universe) are anomalies in a more or less homogeneous fabric, without directly specifying what that fabric is, or whether it still has the concept of atoms, quanta and such, or not at all.

😱😅
