Local AI Chatbot: I Downloaded Ollama with WebUI

For the past week, I've been learning more and more about AI (artificial intelligence) and LLMs (large language models), and I built up some foundational knowledge while setting up my first local AI chatbot on my machine.

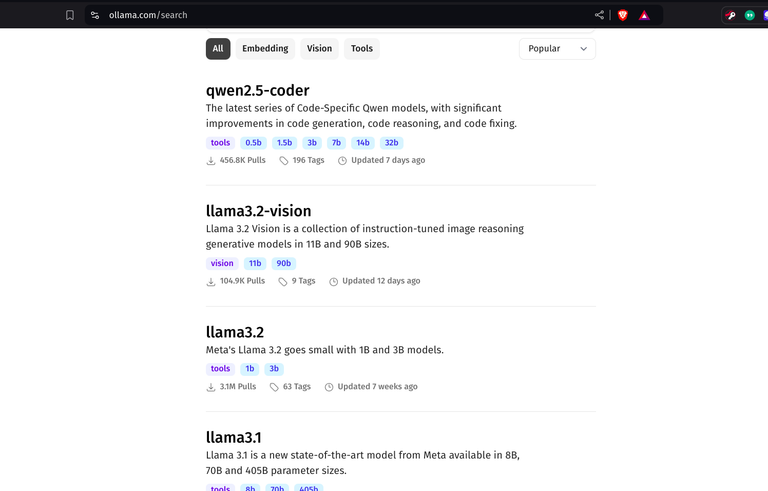

After watching many videos on the topic, I concluded that the Ollama platform and its models are the most beginner-friendly option, especially since local web UI chatbots are often built around Ollama models. If I had been planning to use Python, I think I would have gone with Hugging Face models, which I have tried before. This time, I'll discuss Ollama.

For installation, I first tried downloading Ollama from the official site. The process was simple on Linux: I just had to copy the command from https://ollama.com/download into the terminal:

curl -fsSL https://ollama.com/install.sh | sh

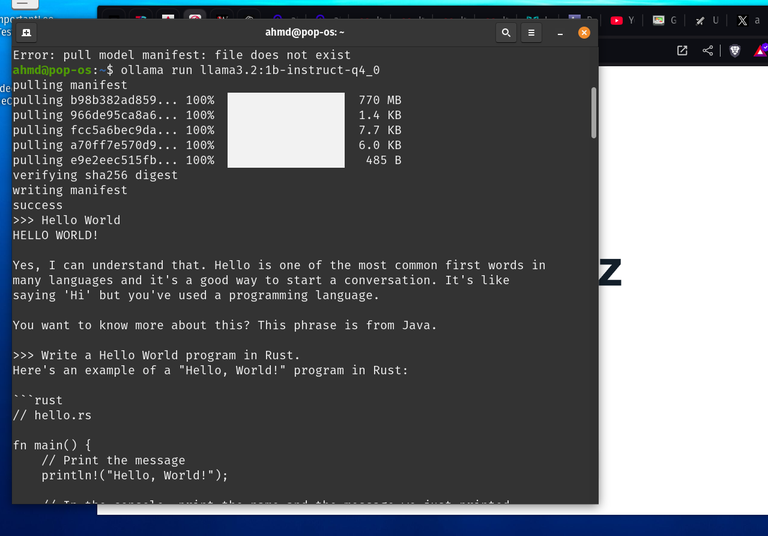

After installing it this way and testing my first model in the terminal, I found the experience surprisingly satisfying, but not very pleasant to use.
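
For reference, a first terminal session might look like the sketch below. The model tag `llama3.2:1b` and the prompt are assumptions for illustration; the post does not record which model was tested first.

```shell
#!/bin/sh
# Assumed model tag; swap in whichever model you actually want to try.
MODEL="llama3.2:1b"

if command -v ollama >/dev/null 2>&1; then
  ollama pull "$MODEL"    # download the model weights
  ollama list             # confirm the model is now available locally
  # One-shot prompt; run `ollama run "$MODEL"` without a prompt for an interactive chat.
  ollama run "$MODEL" "Explain in one sentence what a large language model is."
else
  echo "ollama not found; run the install command first" >&2
fi
```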

I realized that a bare terminal is not how you want to interact with a chatbot. I discovered Web UI right after that, so installing it was my next step. However, since the Web UI setup I chose runs in Docker with its own copy of Ollama, I removed Ollama from my system first.

After uninstalling my original Ollama installation, I followed the instructions to install WebUI with its own Ollama integration on Docker. The installation wasn't very hard, but I ran into errors because I was low on disk space, and I had to remove a lot of extra files to complete it.
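
A minimal sketch of that Docker setup, assuming "WebUI" here means the Open WebUI project and its image with Ollama bundled in (`ghcr.io/open-webui/open-webui:ollama`). The host port 3000, the volume names, and the container name follow that project's published defaults as I understand them, not details taken from this post, and this is the CPU-only variant.

```shell
#!/bin/sh
# Assumed values, based on the Open WebUI project's defaults (not from this post).
WEBUI_IMAGE="ghcr.io/open-webui/open-webui:ollama"
WEBUI_PORT=3000
WEBUI_NAME="open-webui"

start_webui() {
  # -d: run in the background
  # -p: map host port 3000 to the app's port 8080 inside the container
  # -v: named volumes keep downloaded models and chat data across restarts
  docker run -d \
    -p "${WEBUI_PORT}:8080" \
    -v ollama:/root/.ollama \
    -v open-webui:/app/backend/data \
    --name "$WEBUI_NAME" \
    --restart always \
    "$WEBUI_IMAGE"
}

# Only start the container when Docker is actually installed.
if command -v docker >/dev/null 2>&1; then
  start_webui
else
  echo "docker not found; install Docker first" >&2
fi
```

Once the container is up, the interface should be reachable at http://localhost:3000. The image is several gigabytes, which is worth knowing if disk space is tight.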

It was my first time using Docker, so I had to learn it along the way. The whole "containers" concept was confusing. It also took me a while to learn that images downloaded through Docker are kept even after the containers created from them are deleted. For someone with limited disk space like me, that was valuable information.
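
The commands below are one way to see the difference between containers and images for yourself. The listing commands are read-only; the cleanup commands are left commented out because they delete data, and `<container>` and `<image>` are placeholders for names or IDs from your own listings.

```shell
#!/bin/sh
docker_report() {
  docker ps -a        # every container, running or stopped
  docker images       # every downloaded image, whether or not a container still uses it
  docker system df    # disk space used by images, containers, and volumes
}

if command -v docker >/dev/null 2>&1; then
  docker_report
else
  echo "docker not found" >&2
fi

# Cleanup (commented out on purpose):
#   docker rm <container>    # removes the container only; its image stays on disk
#   docker rmi <image>       # removes the image and frees its space
#   docker image prune       # removes dangling (untagged, unused) images
```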

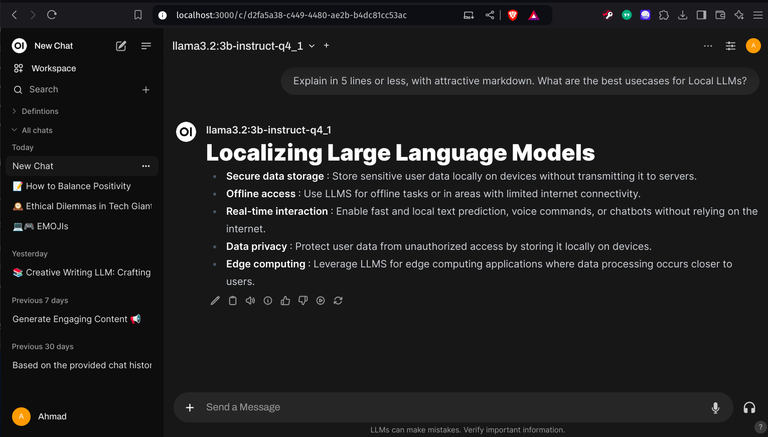

After installing Web UI, I had to install a model to chat with. First, I chose to download the 1B model of Llama 3.2, which is designed for edge devices and felt like the most suitable for my machine. I just had to look up its URL on ollama.com and go to WebUI's Admin Settings -> Models to load it.
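
The same download can probably be done from the command line as well. This sketch assumes the container is named `open-webui`, that the bundled image exposes the `ollama` command inside the container, and that the model's tag is `llama3.2:1b`; none of these are confirmed by this post, so check them against your own setup.

```shell
#!/bin/sh
# Assumed names; adjust to match your container and the tag shown on ollama.com.
CONTAINER="open-webui"
MODEL="llama3.2:1b"

if command -v docker >/dev/null 2>&1; then
  # Run the Ollama CLI inside the running container.
  docker exec "$CONTAINER" ollama pull "$MODEL"
  docker exec "$CONTAINER" ollama list    # the new model should appear here
else
  echo "docker not found" >&2
fi
```

After the pull finishes, the model should show up in the model selector of the chat interface.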

After that, I had access to a fully featured chatbot interface. It even had a free "Arena Model" that answers with a randomly chosen LLM, so you can read the response and give feedback on it.

After trying the Llama 3.2 1B model, I decided to download the more powerful 3B version. However, I went with the 4-bit quantized build because I knew my PC wouldn't handle the larger non-quantized version. I also downloaded an uncensored Dolphin model, which I liked best out of all the models I tried. That's a story for another day, though.
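
To show why quantization matters on a small machine, here is a back-of-the-envelope estimate of weight size: parameters times bits per weight, divided by 8, gives bytes. The round figure of 3 billion parameters and the 16-bit baseline for the non-quantized version are assumptions; real model files are somewhat larger because of metadata and layers kept at higher precision.

```shell
#!/bin/sh
# Assumption: a "3B" model has roughly 3 billion parameters.
# Working in millions of parameters keeps the numbers small:
# millions of parameters * bits per weight / 8 = megabytes.
PARAMS_MILLIONS=3000

estimate_mb() {
  # $1 = bits per weight; prints the approximate weight size in MB
  echo $(( PARAMS_MILLIONS * $1 / 8 ))
}

echo "16-bit weights: ~$(estimate_mb 16) MB"   # ~6000 MB
echo "4-bit weights:  ~$(estimate_mb 4) MB"    # ~1500 MB
```

By this rough estimate, the 4-bit build needs about a quarter of the memory of the 16-bit one.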

Thanks for reading!

Posted Using InLeo Alpha
